The field of artificial intelligence (AI) has grown rapidly in recent years, and deep learning algorithms are behind much of that progress. With the launch of AI tools such as OpenAI's DALL-E and ChatGPT, deep learning has emerged as a key area of research. However, with so many algorithms available, it can be difficult to know which ones are the most important to understand.

Dive into the fascinating world of deep learning and explore the top, must-know algorithms crucial to understanding artificial intelligence.

1. Convolutional Neural Networks (CNNs)

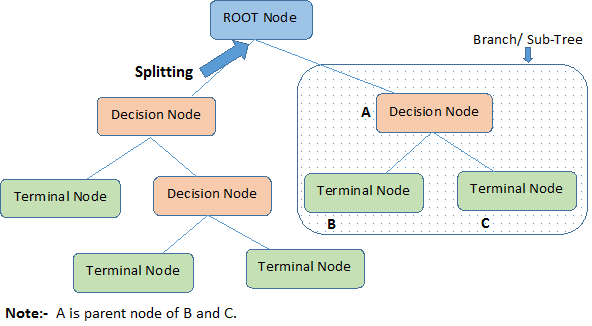

Convolutional Neural Networks (CNNs), also known as ConvNets, are neural networks that excel at object detection, image recognition, and image segmentation. They stack multiple layers to extract increasingly complex features from the input data. CNNs are built mainly from four types of layers:

- Convolution layer

- Rectified Linear Unit (ReLU) layer

- Pooling layer

- Fully connected layer

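Together, these four layers form the network's working mechanism. The convolution layer comes first: it slides small learned filters (kernels) across the input to produce feature maps that highlight patterns such as edges and textures. The ReLU layer then sets every negative value in those feature maps to zero, adding the nonlinearity the network needs to learn complex patterns. Next, the pooling layer downsamples each feature map, for example by keeping only the largest value in each small window, which shrinks the data while preserving its most prominent features. Finally, the pooled feature maps are flattened from 2D into a one-dimensional vector, which the fully connected layer uses to classify the image or other input.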
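To make the four stages concrete, here is a minimal, dependency-free Python sketch of a single forward pass. It is only an illustration: real CNNs are built with frameworks such as PyTorch or TensorFlow and learn their kernels and weights during training. The 5x5 image, the hand-picked edge-detecting kernel, the dense-layer weights, and the helper function names are all assumptions made for this example.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution, stride 1, no padding.

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is slid over the image without being flipped."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Set every negative activation to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, cols - size + 1, size)
        ]
        for i in range(0, rows - size + 1, size)
    ]


def flatten(feature_map):
    """Turn a 2D feature map into a 1D vector for the fully connected layer."""
    return [v for row in feature_map for v in row]


def dense(vector, weights, biases):
    """Fully connected layer: each output unit sees every input value."""
    return [sum(w * x for w, x in zip(row, vector)) + b for row, b in zip(weights, biases)]


# Toy 5x5 grayscale image: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 1], [-1, 1]]  # responds to dark-to-bright vertical edges

feature_map = relu(conv2d(image, kernel))
features = flatten(max_pool(feature_map))
# Two output scores, e.g. "edge" vs. "no edge"; the weights are made up.
scores = dense(features, weights=[[1, 1, 1, 1], [-1, -1, -1, -1]], biases=[0, 0])
print(scores)  # prints [4.0, -4.0]
```

The kernel responds only where dark pixels meet bright ones, so after ReLU, pooling, and flattening, the first score is high and the second is low.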

2. Deep Belief Networks

Deep Belief Networks (DBNs) are another popular deep learning architecture. They are generative models that learn to recognize patterns in data one layer at a time, and they have been applied to tasks such as face recognition and image feature detection.

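The DBN mechanism involves stacked layers of Restricted Boltzmann Machines (RBMs). Each RBM is a small two-layer artificial neural network, with a visible layer and a hidden layer, that learns to recognize patterns in its input. A DBN is typically trained greedily, one RBM at a time, with each layer's hidden activations serving as the input to the next. In the resulting network, the top two layers are connected in both directions, while the lower layers pass information top-down; after this pretraining, the whole stack can be fine-tuned to classify data into different categories.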
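The greedy, layer-by-layer training can be sketched in plain Python. This minimal version assumes binary units, trains each RBM with one step of contrastive divergence (CD-1, a common RBM training method), and uses three made-up six-pixel patterns. The class and function names are hypothetical, and the supervised fine-tuning step is left out.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class RBM:
    """Binary Restricted Boltzmann Machine: a visible and a hidden layer, no links within a layer."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = random.Random(seed)
        self.W = [[self.rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
        self.b_v = [0.0] * n_visible  # visible biases
        self.b_h = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v):
        return [sigmoid(self.b_h[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.b_h))]

    def visible_probs(self, h):
        return [sigmoid(self.b_v[i] + sum(h[j] * self.W[i][j] for j in range(len(h))))
                for i in range(len(self.b_v))]

    def cd1_update(self, v0, lr=0.1):
        """One contrastive-divergence (CD-1) step on a single training vector."""
        h0 = self.hidden_probs(v0)  # hidden activity driven by the data
        h0_sample = [1.0 if self.rng.random() < p else 0.0 for p in h0]
        v1 = self.visible_probs(h0_sample)  # reconstruction of the input
        h1 = self.hidden_probs(v1)  # hidden activity driven by the model
        # Move toward data correlations and away from reconstruction correlations.
        for i in range(len(v0)):
            for j in range(len(h0)):
                self.W[i][j] += lr * (v0[i] * h0[j] - v1[i] * h1[j])
            self.b_v[i] += lr * (v0[i] - v1[i])
        for j in range(len(h0)):
            self.b_h[j] += lr * (h0[j] - h1[j])


def train_dbn(data, layer_sizes, epochs=50):
    """Greedy layer-wise pretraining: each RBM learns from the layer below it."""
    rbms, inputs = [], data
    for n_hidden in layer_sizes:
        rbm = RBM(len(inputs[0]), n_hidden)
        for _ in range(epochs):
            for v in inputs:
                rbm.cd1_update(v)
        rbms.append(rbm)
        inputs = [rbm.hidden_probs(v) for v in inputs]  # becomes the next RBM's "data"
    return rbms, inputs


# Three toy 6-pixel binary patterns.
data = [[1, 1, 0, 0, 0, 0], [0, 0, 1, 1, 0, 0], [0, 0, 0, 0, 1, 1]]
rbms, top_features = train_dbn(data, layer_sizes=[4, 2])
```

Each pattern ends up summarized by two top-level feature probabilities, which a classifier could then be trained on.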

3. Recurrent Neural Networks (RNNs)

A Recurrent Neural Network (RNN) is a popular deep learning algorithm with a wide range of applications. It is best known for its ability to process sequential data and to build language models. It learns patterns from data and predicts outcomes without those patterns being explicitly programmed. For example, a search engine's autocomplete feature, such as Google's, predicts relevant searches from the words typed so far, a sequence-prediction task well suited to RNNs.

What makes the network recurrent is a loop: at each step of a sequence, it combines the current input with a hidden state carried over from the previous step. This hidden state acts as a memory of everything seen so far, so the prediction at each step can depend on earlier inputs as well as the current one. Because the same weights are reused at every step, an RNN can process sequences of any length. Therefore, RNNs are well suited to language modeling and other sequence modeling tasks.
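The recurrence can be sketched in a few lines of Python. This is a minimal forward pass of a vanilla (Elman) RNN with hand-set, hypothetical weights; training (backpropagation through time) and the output layer that turns hidden states into predictions are left out.

```python
import math


def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence of input vectors.

    At each step: h_t = tanh(W_xh @ x_t + W_hh @ h_{t-1} + b_h).
    The same weights are reused at every step; h_t carries memory forward."""
    hidden = [0.0] * len(b_h)  # h_0: the memory starts empty
    states = []
    for x in inputs:
        hidden = [
            math.tanh(
                sum(w * xi for w, xi in zip(W_xh[j], x))        # current input
                + sum(w * hi for w, hi in zip(W_hh[j], hidden))  # previous hidden state
                + b_h[j]
            )
            for j in range(len(b_h))
        ]
        states.append(hidden)
    return states


# One hidden unit; a single input "pulse" followed by two zeros.
states = rnn_forward([[1.0], [0.0], [0.0]], W_xh=[[1.0]], W_hh=[[0.5]], b_h=[0.0])
print([round(h[0], 3) for h in states])
```

Even though the second and third inputs are zero, their hidden states are not: the memory of the first input fades gradually instead of disappearing at once.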

4. Long Short-Term Memory Networks (LSTMs)

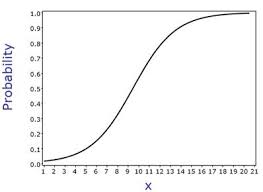

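Long Short-Term Memory Networks (LSTMs) are a type of Recurrent Neural Network (RNN) designed to learn long-term dependencies, that is, connections between pieces of information that are far apart in a sequence. Standard RNNs struggle with this because the training signal fades as it is propagated back through many time steps (the vanishing gradient problem). Their strong memory and predictive capabilities make LSTMs well suited to applications like time series prediction, natural language processing (NLP), speech recognition, and music composition.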

LSTM networks consist of memory blocks (cells) arranged in a chain-like structure. Each block stores information that may be useful later in the sequence and discards information that is no longer needed, which keeps the network efficient.

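As data flows through the network, the LSTM updates its cell state in three steps, each controlled by a gate: a sigmoid layer that outputs values between 0 (block everything) and 1 (let everything through). First, the forget gate decides which parts of the existing cell state are irrelevant and removes them. Next, the input gate decides which parts of the new input are worth keeping, and those new values are added to the cell state. Finally, the output gate determines the output, a filtered version of the updated cell state.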

The ability to handle long-term dependencies sets LSTMs apart from standard RNNs, making them ideal for applications that require such capabilities.
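The three gate steps can be sketched as a single LSTM cell update in plain Python. This follows the standard LSTM formulation; the parameter layout (a `(W, U, b)` tuple per gate), the helper names, and the one-unit toy cell whose gates are set only through their biases are assumptions made for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One step of a standard LSTM cell.

    params maps each gate name ("f", "i", "g", "o") to a tuple (W, U, b):
    W multiplies the input x, U multiplies the previous hidden state h_prev."""
    def affine(name):
        W, U, b = params[name]
        return [
            sum(w * xi for w, xi in zip(W[k], x))
            + sum(u * hi for u, hi in zip(U[k], h_prev))
            + b[k]
            for k in range(len(b))
        ]

    f = [sigmoid(z) for z in affine("f")]    # forget gate: what to drop from c_prev
    i = [sigmoid(z) for z in affine("i")]    # input gate: how much new info to write
    g = [math.tanh(z) for z in affine("g")]  # candidate values to write
    o = [sigmoid(z) for z in affine("o")]    # output gate: what to expose as h
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


def make_params(forget_bias, input_bias):
    """Hypothetical one-unit cell with one input; only the gate biases differ."""
    return {
        "f": ([[0.0]], [[0.0]], [forget_bias]),
        "i": ([[0.0]], [[0.0]], [input_bias]),
        "g": ([[1.0]], [[0.0]], [0.0]),
        "o": ([[0.0]], [[0.0]], [0.0]),
    }


def run(params, steps=10):
    h, c = [0.0], [1.0]  # the cell starts out "holding" the value 1.0
    for _ in range(steps):
        h, c = lstm_step([0.0], h, c, params)
    return h, c


h_keep, c_keep = run(make_params(forget_bias=10.0, input_bias=-10.0))
h_drop, c_drop = run(make_params(forget_bias=-10.0, input_bias=10.0))
```

With the forget gate held open (bias +10), the cell keeps its value across ten steps; with it held shut (bias -10), the old value is wiped out after the first step.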

5. Generative Adversarial Networks

Generative Adversarial Networks (GANs) are a type of deep learning algorithm that supports generative AI. They can learn without labeled data: after training on a specific dataset, they generate new data instances that resemble it.

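The GAN model consists of two key elements that are trained against each other: a generator and a discriminator. The generator turns random noise into fake data that imitates the training data. The discriminator receives both real examples and the generator's output and learns to tell them apart. The discriminator's verdicts are then used to update the generator, so over time the generator produces data realistic enough to fool the discriminator.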
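The adversarial loop can be sketched with a deliberately tiny, one-dimensional GAN in plain Python with hand-derived gradients. Everything here is an illustrative assumption: the "real" data is drawn from a normal distribution with mean 4, the generator is the linear map `a*z + b`, the discriminator is a logistic function `sigmoid(w*x + c)`, and the generator uses the common "non-saturating" loss. Such simple models can only push the generator's output toward the real data's location; they do not reproduce its spread, and training may oscillate rather than settle.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, batch=32, seed=0):
    """Toy 1-D GAN. Real data ~ N(4, 1); generator G(z) = a*z + b maps noise
    z ~ N(0, 1) to fake samples; discriminator D(x) = sigmoid(w*x + c) outputs
    the probability that x is real."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.1, 0.0  # discriminator parameters
    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # 1) Discriminator: minimize -[log D(real) + log(1 - D(fake))].
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw -= (1.0 - d) * x
            gc -= 1.0 - d
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x
            gc += d
        w -= lr * gw / batch
        c -= lr * gc / batch

        # 2) Generator: minimize -log D(G(z)), i.e. make D label fakes as real.
        ga = gb = 0.0
        for z in noise:
            d = sigmoid(w * (a * z + b) + c)
            dloss_dx = -(1.0 - d) * w  # how the loss changes as the fake sample moves
            ga += dloss_dx * z
            gb += dloss_dx
        a -= lr * ga / batch
        b -= lr * gb / batch
    return a, b, w, c


a, b, w, c = train_toy_gan()
print(f"generator offset b = {b:.2f} (real mean is 4.0)")
```

Alternating one discriminator update with one generator update, as above, mirrors the basic GAN training loop; real GANs replace both linear models with deep networks and let a framework compute the gradients.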

GANs are widely used for image generation, such as enhancing the graphics quality in video games. They are also useful for enhancing astronomical images, simulating gravitational lenses, and generating videos. GANs remain a popular research topic in the AI community, as their potential applications are vast and varied.

6. Multilayer Perceptrons

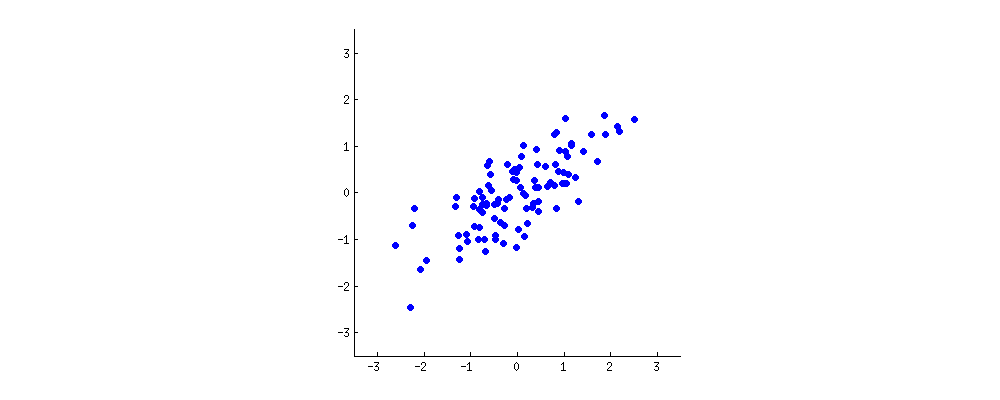

A Multilayer Perceptron (MLP) is another deep learning algorithm: a neural network with interconnected nodes arranged in multiple layers. Data flows in a single direction, from input to output, which makes it a feedforward network. MLPs are commonly used for object classification and regression tasks.

An MLP has an input layer, an output layer, and one or more hidden layers in between that transform the data. Each layer contains multiple neurons; in a typical MLP, every neuron is connected to every neuron in the next layer, while neurons within the same layer are not connected to each other. The data is first fed to the input layer, from where it progresses through the network.

The hidden layers play a significant role: each neuron computes a weighted sum of its inputs and passes it through an activation function such as ReLU, sigmoid, or tanh. The output layer then processes the result and generates the network's output.
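Here is a minimal forward pass through such a network in plain Python. The helper names are hypothetical, and the weights are hand-picked rather than learned: they make a tiny 2-2-1 MLP compute XOR, a function that a single-layer perceptron cannot represent, which illustrates why hidden layers with nonlinear activations matter.

```python
def relu(v):
    return [max(0.0, x) for x in v]


def layer(inputs, weights, biases):
    """Fully connected layer: every input feeds every neuron in this layer."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def mlp_forward(x, params):
    """Feedforward pass: hidden layers use ReLU; the output layer is linear."""
    *hidden, (W_out, b_out) = params
    for W, b in hidden:
        x = relu(layer(x, W, b))
    return layer(x, W_out, b_out)


# Hand-picked (not learned) weights for a 2-2-1 network that computes XOR.
xor_params = [
    ([[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]),  # hidden: h1 = relu(x1 + x2), h2 = relu(x1 + x2 - 1)
    ([[1.0, -2.0]], [0.0]),                   # output: h1 - 2 * h2
]

for a in (0, 1):
    for b in (0, 1):
        print(a, b, mlp_forward([a, b], xor_params)[0])
```

In practice, the weights would be learned with backpropagation instead of being set by hand.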

This simple yet effective model is useful for speech and video recognition and translation software. MLPs have gained popularity due to their straightforward design and ease of implementation in various domains.

7. Autoencoders

Autoencoders are a type of deep learning algorithm used for unsupervised learning. Like MLPs, they are feedforward models with a one-directional data flow. An autoencoder is trained to reproduce its input at its output after squeezing it through a compressed internal representation, which makes it useful for image processing; the same encoder-decoder design also appears in language translation models.

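The model consists of three components: the encoder, the code, and the decoder. The encoder compresses the input into a smaller representation, the code, and the decoder reconstructs the input from that code. Training minimizes the difference between the original input and its reconstruction, which forces the code to capture the data's most important features. This algorithm can be applied in various fields, such as computer vision, natural language processing, and recommendation systems.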
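A minimal sketch of the encode, compress, and decode loop: a linear autoencoder in plain Python that squeezes 2D points through a one-number code and learns, by gradient descent, to reconstruct them. The toy data (points on the line x2 = 2 * x1), the initial weights, the learning rate, and the function name are illustrative assumptions; practical autoencoders use nonlinear layers and a framework such as PyTorch.

```python
def train_linear_autoencoder(data, steps=2000, lr=0.05):
    """Linear autoencoder 2 -> 1 -> 2, trained by full-batch gradient descent
    on the mean squared reconstruction error."""
    enc = [0.1, 0.1]  # encoder weights: code = enc . x
    dec = [0.1, 0.1]  # decoder weights: x_hat = dec * code

    def loss():
        total = 0.0
        for x in data:
            code = enc[0] * x[0] + enc[1] * x[1]
            total += 0.5 * sum((dec[k] * code - x[k]) ** 2 for k in range(2))
        return total / len(data)

    history = [loss()]
    for _ in range(steps):
        g_enc, g_dec = [0.0, 0.0], [0.0, 0.0]
        for x in data:
            code = enc[0] * x[0] + enc[1] * x[1]            # encode into the bottleneck
            err = [dec[k] * code - x[k] for k in range(2)]  # decode and compare
            d_code = err[0] * dec[0] + err[1] * dec[1]      # backpropagate into the code
            for k in range(2):
                g_dec[k] += err[k] * code
                g_enc[k] += d_code * x[k]
        for k in range(2):
            enc[k] -= lr * g_enc[k] / len(data)
            dec[k] -= lr * g_dec[k] / len(data)
        history.append(loss())
    return enc, dec, history


# Toy data lying on the line x2 = 2 * x1, so a single number per point suffices.
points = [[t, 2.0 * t] for t in (-1.0, -0.5, 0.5, 1.0)]
enc, dec, history = train_linear_autoencoder(points)
print(f"reconstruction loss: {history[0]:.3f} -> {history[-1]:.6f}")
```

Because the toy points vary along only one direction, a one-number code is enough to reconstruct them almost perfectly, which is exactly the kind of structure an autoencoder's bottleneck is meant to discover.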

Choosing the Right Deep Learning Algorithm

To select the appropriate deep learning approach, it is crucial to consider the nature of the data, the problem at hand, and the desired outcome. By understanding each algorithm’s fundamental principles and capabilities, you can make informed decisions.

Choosing the right algorithm can make all the difference in the success of a project. It is an essential step toward building effective deep learning models.