Machine learning is the science of enabling computers to function without being programmed to do so.

This branch of artificial intelligence can enable systems to identify patterns in data, make decisions, and predict future outcomes. Machine learning can help companies determine the products you’re most likely to buy and even the online content you’re most likely to consume and enjoy.

Machine learning makes it easier to analyze and interpret massive amounts of data, which would otherwise take decades or even an eternity for humans to decode.

In effect, machine learning is an attempt to teach computers to think, learn, and act like humans. Thanks to increasing internet speeds, advancements in storage technology, and expanding computational power, machine learning has exponentially advanced and become an integral part of almost every industry.

What is machine learning?

Machine learning (ML) is a branch of artificial intelligence (AI) that focuses on building applications that can automatically and periodically learn and improve from experience without being explicitly programmed.

With the backing of machine learning, applications become more accurate at decision-making and predicting outcomes. As a matter of fact, machine learning is responsible for the majority of advancements in the field of artificial intelligence and is an integral part of data science.

By granting computers the ability to learn and improve, they can solve real-world problems without being specifically instructed to do so. For that, machine learning algorithms are trained to perform pattern recognition in vast amounts of data or big data.

Recommendation systems are one of the most common applications of machine learning. Companies like Google, Netflix, and Amazon use machine learning to understand preferences better and use the information to recommend products and services.

The emphasis here is on leveraging data. By implementing statistics on vast volumes of data, machine learning algorithms can find patterns and use these patterns to make predictions. In other words, these algorithms can utilize historical data as input and predict new output values.

Collecting data is easy. But analyzing and making sense of vast volumes of data is the hardest part. That’s where machine learning makes all the difference. If a specific dataset can be digitally stored, it can be fed into an ML algorithm and processed to gain valuable insights.

Machine learning vs. traditional programming

Traditional software applications have a narrower scope. They depend on explicit instructions from humans to work and can’t think for themselves. These specific instructions could be something like ‘if you see X, then perform Y’.

Machine learning, on the other hand, doesn’t require any explicit instruction to function. Instead, you give an application the essential data and tools needed to study a problem and it will solve it without being told what to do. Additionally, you also provide the application the ability to remember what it did so that it can learn, adapt, and improve periodically – similar to humans.

If you’re going with traditional programming and by the ‘if X then Y’ route, then things can get messy.

Suppose you create a spam detection application that deletes all spammy emails. To identify such emails, you explicitly instruct the application to look for terms like “earn,” “free,” and “zero investment”.

A spammer can easily manipulate the system by choosing synonyms of these terms or replacing certain characters with numbers. The spam detection application will also come across numerous false positives, such as when your friend sends a genuine email containing a code for free movie tickets.

Such limitations can be eliminated by machine learning. Instead of inputting instructions, machine learning requires data to learn and understand what a malicious email would look like. By learning by example (not instructions), the application gets better with time and can detect and delete spam messages more accurately.

Still not convinced why machine learning is a godsend technology?

Here are some situations where machine learning becomes invaluable:

- If the rules of a particular task continually change, for example, in the case of fraud detection, traditional applications will break, but machine learning can handle the variations.

- In the case of image recognition, the rules are too complex to be hand-written. Also, it’s virtually impossible for a human to code every distinction and feature into the application. A machine learning algorithm can learn to identify these features by analyzing huge volumes of image data.

- A traditional application will falter if the nature of the data it processes changes. In the case of demand forecasting or predicting upcoming trends, the type of data might frequently change, and a machine learning application can adapt with ease.

Want to learn more about Machine Learning Software? Explore Machine Learning products.

A brief history of machine learning

Machine learning has been around for quite some time. It’s easy to tell that because computers are rarely referred to as “machines” anymore. Here’s a quick look at the evolution of machine learning from inception to realization.

Pre-1920s: Thomas Bayes, Andrey Markov, Adrien-Marie Legendre, and other acclaimed mathematicians lay the necessary groundwork for the foundational machine learning techniques.

1943: The first mathematical model of neural networks is presented in a scientific paper by Walter Pitts and Warren McCulloch.

1949: The Organization of Behavior, a book by Donald Hebb, is published. This book explores how behavior relates to brain activity and neural networks.

1950: Alan Turing tries to describe artificial intelligence and questions whether machines have the capabilities to learn.

1951: Marvin Minsky and Dean Edmonds built the very first artificial neural network.

1956: John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon organized the Dartmouth Workshop. The event is often referred to as the “birthplace of AI,” and the term “artificial intelligence” was coined in the same event.

1965: Alexey (Oleksii) Ivakhnenko and Valentin Lapa developed the first multi-layer perceptron. Ivakhnenko is often regarded as the father of deep learning (a subset of machine learning).

1967: The nearest neighbor algorithm is conceived.

1979: Computer scientist Kunihiko Fukushima published his work on neocognitron: a hierarchical multilayered network used to detect patterns. Neocognitron also inspired convolutional neural networks (CNNs).

1985: Terrence Sejnowski invents NETtalk. This program learns to pronounce (English) words the same way babies do.

1995: Tin Kam Ho introduces random decision forests in a paper.

1997: Deep Blue, the IBM chess computer, beats Garry Kasparov, the world champion in chess.

2000: The term “deep learning” was first mentioned by neural networks researcher Igor Aizenberg.

2009: ImageNet, a large image database extensively used for visual object recognition research, is launched by Fei-Fei Li.

2011: Google’s X Lab developed Google Brain, an artificial intelligence algorithm. Later this year, IBM Watson beat human competitors on the trivia game show Jeopardy!.

2014: Ian Goodfellow and his colleagues develop a generative adversarial network (GAN). The same year, Facebook developed DeepFace. It’s a deep learning facial recognition system that can spot human faces in images with nearly 97.25% accuracy. Later, Google introduces a large-scale machine learning system called Sibyl to the public.

2015: AlphaGo becomes the first AI to beat a professional player at Go.

2020: Open AI announces GPT-3, a robust natural language processing algorithm with the ability to generate human-like text.

How does machine learning work?

At its heart, machine learning algorithms analyze and identify patterns from datasets and use this information to make better predictions on new data sets.

It’s similar to how humans learn and improve. Whenever we make a decision, we consider our past experiences to assess the situation better. A machine learning model does the same by analyzing historical data to make predictions or decisions. After all, machine learning is an AI application that enables machines to self-learn from data.

To get a simple understanding of how machine learning works, imagine how you would learn to play the dinosaur game – a game you would’ve come across only if you use Google Chrome and have an unreliable internet connection.

The game will end only after 17 million years of playtime (the approximate number of years the game character, the T-Rex dinosaur, existed before they went extinct). So finishing the game is out of the question.

In case you haven’t played the game before, you have to jump whenever the T-Rex encounters a cactus plant and jump or duck whenever it encounters a bird.

As a human, you would use the trial and error method to learn how to play the game. By playing the game a couple of times, you could easily understand that to not lose, you need to avoid running into the cactus or the bird.

An AI application would also learn almost similarly. A developer could specify in the application’s code to jump 1/20th of the time whenever it encounters a dense area of dark pixels. If the particular action reduced the chances of losing, it could be increased to jump 1/10th of the time. By playing more and encountering more obstacles, the application could predict when to jump or duck.

More precisely, the application would continually collect data regarding actions, environment, and outcomes. The collected data is usually used to develop a graph. After many trials and errors, the AI could plot a graph that could help predict the most suitable action: jump or duck.

Here’s another example.

Consider the following sequence.

- 3 – 9

- 4 – 16

- 5 – 25

So if you were given the number 6, which number would you pick so that the pair would match the above sequence?

If you concluded that it’s 36, how did you do it?

You probably analyzed the previous data (historical data) and “predicted” the number with the highest probability. A machine learning model is no different. It learns from experience and uses the accumulated information to make better predictions.

In essence, machine learning is pure math. Any and every machine learning algorithm is built around a mathematical function that can be modified. This also means that the learning process in machine learning is also based on mathematics.

4 types of machine learning methods

There are numerous machine learning methods by which AI systems can learn from data. These methods are categorized based on the nature of data (labeled or unlabeled) and the results you anticipate. Generally, there are four types of machine learning: supervised, unsupervised, semi-supervised, and reinforcement learning.

1. Supervised learning

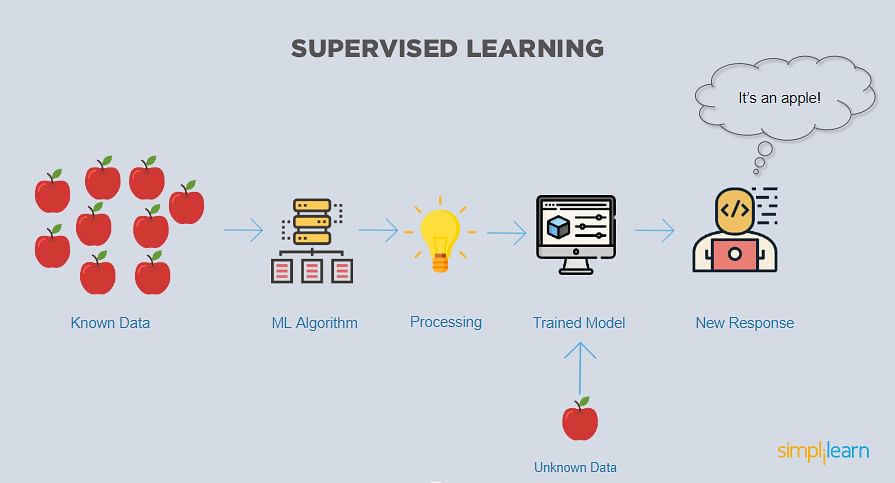

Supervised learning is a machine learning approach in which a data scientist acts like a tutor and trains the AI system by feeding basic rules and labeled datasets. The datasets will include labeled input data and expected output results. In this machine learning method, the system is explicitly told what to look for in the input data.

In simpler terms, supervised learning algorithms learn by example. Such examples are collectively referred to as training data. Once a machine learning model is trained using the training dataset, it’s given the test data to determine the model’s accuracy.

Supervised learning can be further classified into two types: classification and regression.

2. Unsupervised learning

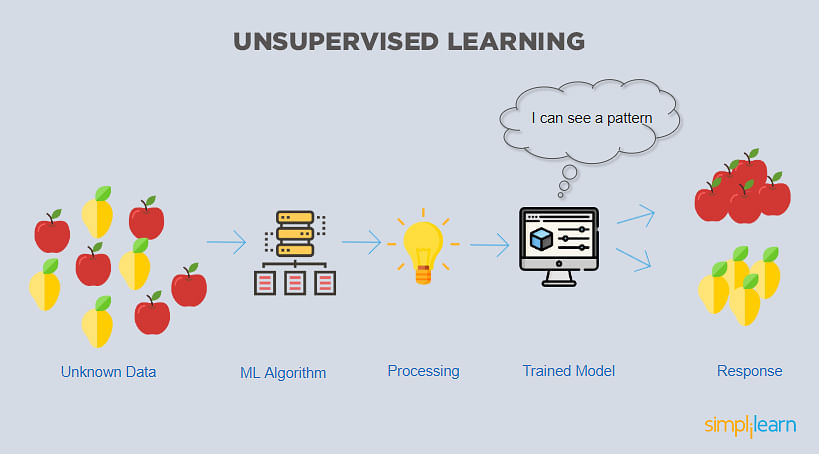

Unsupervised learning is a machine learning technique in which the data scientist lets the AI system learn by observing. The training dataset will contain only the input data and no corresponding output data.

When compared to supervised learning, this machine learning method requires massive amounts of unlabeled data to observe, find patterns, and learn. Unsupervised learning could be a goal in itself, for example, discovering hidden patterns in datasets or a method for feature learning.

Unsupervised learning problems are generally grouped into clustering and association problems.

3. Semi-supervised learning

Semi-supervised learning is an amalgam of supervised and unsupervised learning. In this machine learning process, the data scientist trains the system just a little bit so that it gets a high-level overview.

Also, a small percentage of the training data will be labeled, and the remaining will be unlabeled. Unlike supervised learning, this learning method demands the system to learn the rules and strategy by observing patterns in the dataset.

Semi-supervised learning is beneficial when you don’t have enough labeled data, or the labeling process is expensive, but you want to create an accurate machine learning model.

4. Reinforcement learning

Reinforcement learning (RL) is a learning technique that allows an AI system to learn in an interactive environment. A programmer will use a reward-penalty approach to teach the system, enabling it to learn by trial and error and receiving feedback from its own actions.

Simply put, in reinforcement learning, the AI system will face a game-like situation in which it has to maximize the reward.

Although the programmer defines the game rules, the individual doesn’t provide any hints on how to solve or win the game. The system must find its way by making numerous random trials and learn to improve from each step.

Uses of machine learning

It’s safe to say that machine learning has impacted almost every field that underwent a digital transformation. This branch of artificial intelligence has immense potential when it comes to task automation, and its predictive capabilities are saving lives in the healthcare industry.

Here are some of the many use cases of machine learning.

Image recognition

Machines are getting better at processing images. In fact, machine learning models are better and faster in recognizing and classifying images than humans.

This application of machine learning is called image recognition or computer vision. It’s powered by deep learning algorithms and uses images as the input data. You have most likely seen this feat in action when you uploaded a photo on Facebook and the app suggested tagging your friends by recognizing their faces.

Customer relationship management (CRM) software

Machine learning enables CRM software applications to decode the “why” questions.

Why does a specific product outperform the rest? Why do customers make a particular action on the website? Why aren’t customers satisfied with a product?

By analyzing historical data collected by CRM applications, machine learning models can help build better sales strategies and even predict emerging market trends. ML can also find means to reduce churn rates, improve customer lifetime value, and help companies stay one step ahead.

Along with data analysis, marketing automation, and predictive analytics, machine learning grants companies the ability to be available 24/7 by its embodiment as chatbots.

Patient diagnosis

It’s safe to say that paper medical records are a thing of the past. A good number of hospitals and clinics have now adopted electronic health records (EHRs), making the storage of patient information more secure and efficient.

Since EHRs convert patient information to a digital format, the healthcare industry gets to implement machine learning and eradicate tedious processes. This also means that doctors can analyze patient data in real time and even predict the possibilities of disease outbreaks.

Along with enhancing medical diagnosis accuracy, machine learning algorithms can help doctors detect breast cancer and predict a disease’s progression rate.

Inventory optimization

If a specific material is stored in excess, it may not be used before it gets spoiled. On the other hand, if there’s a shortage, the supply chain will be affected. The key is to maintain inventory by considering the product demand.

The demand for a product can be predicted based on historical data. For example, ice cream is sold more frequently during the summer season (although not always and everywhere). However, numerous other factors affect the demand, including the day of the week, temperature, upcoming holidays, and more.

Computing such micro and macro factors is virtually impossible for humans. Not surprisingly, processing such massive volumes of data is a specialty of machine learning applications.

For instance, by leveraging The Weather Company’s enormous database, IBM Watson found that yogurt sales increase when the wind is above average, and autogas sales spike when the temperature is colder than average.

Additionally, self-driving cars, demand forecasting, speech recognition, recommendation systems, and anomaly detection wouldn’t have been possible without machine learning.

How to build a machine learning model?

Creating a machine learning model is just like developing a product. There’s ideation, validation, and testing phase, to name a few processes. Generally, building a machine learning model can be broken down into five steps.

Collecting and preparing training dataset

In the machine learning realm, nothing is more important than quality training data.

As mentioned earlier, the training dataset is a collection of data points. These data points help the model to understand how to tackle the problem it’s intended to solve. Typically, the training dataset contains images, text, video, or audio.

The training dataset is similar to a math textbook with example problems. The greater the number of examples, the better. Along with quantity, the dataset’s quality also matters as the model needs to be highly accurate. The training dataset must also reflect the real-world conditions in which the model will be used.

The training dataset can be fully labeled, unlabeled, or partially labeled. As mentioned earlier, this nature of the dataset is dependent on the machine learning method you choose.

Either way, the training dataset must be devoid of duplicate data. A high-quality dataset will undergo numerous stages of the cleaning process and contain all the essential attributes you want the model to learn.

Always keep this phrase in mind: garbage in, garbage out.

Choose an algorithm

An algorithm is a procedure or a method to solve a problem. In machine learning language, an algorithm is a procedure run on data to create a machine learning model. Linear regression, logistic regression, k-nearest neighbors (KNN), and Naive Bayes are a few of the popular machine learning algorithms.

Choosing an algorithm depends on the problem you intend to solve, the type of data (labeled or unlabeled), and the amount of data available.

If you’re using labeled data, you can consider the following algorithms:

- Decision trees

- Linear regression

- Logistic regression

- Support vector machine (SVM)

- Random forest

If you’re using unlabeled data, you can consider the following algorithms:

- K-means clustering algorithm

- Apriori algorithm

- Singular value decomposition

- Neural networks

Also, if you want to train the model to make predictions, choose supervised learning. If you wish to train the model to find patterns or split data into clusters, go for unsupervised learning.

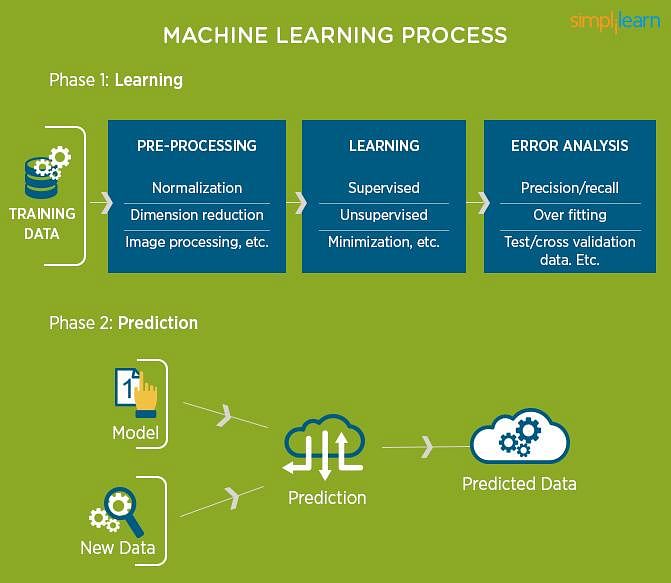

Train the algorithm

The algorithm goes through numerous iterations in this phase. After each iteration, the weights and biases within the algorithm are adjusted by comparing the output with the expected results. The process continues until the algorithm becomes accurate, which is the machine learning model.

Validate the model

For many, the validation dataset is synonymous with the test dataset. In short, it’s a dataset not used during the training phase and is introduced to the model for the first time. The validation dataset is critical for assessing the model’s accuracy and understanding whether it suffers from overfitting: an incorrect optimization of a model when it gets overly tuned to its training dataset.

If the model’s accuracy is less than or equal to 50%, it’s unlikely that it would be useful for real-world applications. Ideally, the model must have an accuracy of 90% or more.

Test the model

Once the model is trained and validated, it needs to be tested using real-world data to verify its accuracy. This step might make the data scientist sweat as the model will be tested on a larger dataset, unlike in the training or validation phase.

In a simpler sense, the testing phase lets you check how well the model has learned to perform the specific task. It’s also the phase where you can determine whether the model will work on a larger dataset.

The model gets better over time and with access to newer datasets. For example, your email inbox’s spam filter gets periodically better when you report particular messages as spam and false positives as not spam.

Top 5 machine learning tools

As mentioned earlier, machine learning algorithms are capable of making predictions or decisions based on data. These algorithms grant applications the ability to offer automation and AI features. Interestingly, the majority of end-users aren’t aware of the usage of machine learning algorithms in such intelligent applications.

To qualify for inclusion in the machine learning category, a product must:

- Offer a product or algorithm capable of learning and improving by leveraging data

- Be the source of intelligent learning abilities in software applications

- Be capable of utilizing data inputs from different data pools

- Have the ability to produce an output that solves a particular issue based on the learned data

* Below are the five leading machine learning software from G2’s Winter 2021 Grid® Report. Some reviews may be edited for clarity.

1. scikit-learn

scikit-learn is a machine learning library for the Python programming language that offers several supervised and unsupervised machine learning algorithms. It contains various statistical modeling and machine learning tools such as classification, regression, and clustering algorithms.

The library is designed to interoperate with the Python numerical and scientific libraries like NumPy and SciPy. scikit-learn can also be used for extracting features from text and images.

What users like:

“The best aspect of this framework is the availability of well-integrated algorithms within the Python development environment. It’s quite easy to install within most Python IDEs and relatively easy to use. Many tutorials are accessible online, making it easier to understand this library. It was clearly built with a software engineering mindset, and nevertheless, it’s very flexible for research ventures. Being built on top of multiple math-based and data libraries, scikit-learn allows seamless integration between them all.

Being able to use NumPy arrays and Pandas DataFrames within the scikit-learn environment removes the need for additional data transformation. That being said, one should definitely get familiar with this easy-to-use library if they plan on becoming a data-driven professional. You can build a simple machine learning model with just ten lines of code! With tons of features like model validation, data splitting for training/testing, and various others, scikit-learn’s open-source approach facilitates a manageable learning curve.”

– scikit-learn Review, Devwrat T.

What users dislike:

“It has great features. However, it has some drawbacks in dealing with categorical attributes. Otherwise, it’s a robust package. I don’t see any other drawbacks to using this package.”

– scikit-learn Review, User in Higher Education

2. Personalizer

Personalizer is a cloud-based service from Microsoft used to deliver personalized, relevant experiences to users. With the help of reinforcement learning, this easy-to-use API helps in improving digital store conversions.

After delivering content, the tool monitors users’ reactions, thereby learning in real time and making the best use of contextual information. Personalizer can be embedded into an app by adding just two lines of code, and it can start with no data.

What users like:

“The ease of us is absolutely wonderful. We got the configuration and our products recommended on our site in no time. After deployment, the app integration was so great that sometimes we forget it’s running in the background doing all the heavy work.”

– Personalizer Review, G2 User in Information Technology and Services

What users dislike:

“There is some lack of documentation online, but it isn’t really needed for the configuration.”

– Personalizer Review, G2 User in Financial Services

3. Google Cloud TPU

Google Cloud TPU is a custom-designed machine learning application-specific integrated circuit (ASIC) designed to run machine learning models with AI services on Google cloud. It offers more than 100 petaflops of performance in just a single pod, which is enough computational power for business and research needs.

What users like:

“I love the fact that we were able to build a state-of-the-art AI service geared towards network security thanks to the optimal running of the cutting-edge machine learning models. The power of Google Cloud TPU is of no match: up to 11.5 petaflops and 4 TB HBM. Best of all, the straight-forward easy to use Google Cloud Platform interface.”

– Google Cloud TPU Review, Isabelle F.

What users dislike:

“I wish there were integration with word processors.”

– Google Cloud TPU Review, Kevin C.

4. Amazon Personalize

Amazon Personalize is a machine learning service that enables developers to build applications with real-time personalized recommendations without any ML expertise. This ML service offers the necessary infrastructure and can be implemented in days. The service also manages the entire ML pipeline, including data processing and identifying the features, as well as training, optimizing, and hosting the models.

What users like:

“Amazon as a whole is usually two steps ahead. But Amazon Personalize takes it to a whole new level. It’s easy to use, perfect for small companies/entrepreneurs, and unique.”

– Amazon Personalize Review, Melissa B.

What users dislike:

“At this point, the only issue is that we have to filter through too many options so that our consumers are not constantly receiving repetitive recommendations.”

– Amazon Personalize Review, G2 User in Higher Education

5. machine-learning in Python

machine-learning in Python is a project that offers a web-interface and a programmatic-API for support vector machine (SVM) and support vector regression (SVR) machine learning algorithms.

What users like:

“Python is an easy to use machine learning programming language which has extensive libraries and packages. Its packages provide efficient visualization to understand. Also, nowadays, it’s used for purposes like automated scripting in cybersecurity.”

– machine-learning in Python Review, Manisha S.

What users dislike:

“Documentation for some functions is rather limited. Not every implemented algorithm is present. Most of the additional libraries are easy to install, but some can be quite cumbersome and take a while.”

– machine-learning in Python Review, G2 User in Higher Education

How machines learn the human world

Along with recommending the products and services you’re more likely to enjoy, machine learning algorithms act as a watchful protector that ensures you aren’t cheated by online fraudsters and keeps your email inbox clean of spam messages. In short, it’s a learning process that helps machines get to know the human world around them.

If machine learning hit the gym five days a week, we would get deep learning. It’s a subset of machine learning that mimics the functioning of the human brain. Read more about deep learning and why it’s crucial for creating robots with human-like intelligence.