Every week, the top AI labs globally (Google, Facebook, Microsoft, Apple and others) release a steady stream of new research, tools, datasets, models, libraries and frameworks in artificial intelligence (AI) and machine learning (ML).

Interestingly, each of them seems to have picked a particular school of thought in deep learning, and the pattern is becoming clearer with time. For instance, Facebook AI Research (FAIR) has long championed self-supervised learning (SSL), releasing papers and technology for computer vision and for image, text, video and audio understanding.

Even though many companies and research institutions have a hand in almost every area of deep learning, each clearly has its favourites. In this article, we explore some of the recent work from each lab in the area it has made its niche.

DeepMind

A subsidiary of Alphabet, DeepMind remains synonymous with reinforcement learning. From AlphaGo to MuZero, the company has championed breakthroughs in the field, and with the recent AlphaFold it has carried its deep learning expertise into biology.

AlphaGo was the first computer program to defeat a professional human Go player. It combines an advanced tree search (Monte Carlo tree search) with deep neural networks. These networks take a description of the Go board as input and process it through many network layers containing millions of neuron-like connections. One neural network, the 'policy network', selects the next move to play, while the other, the 'value network', predicts the winner of the game.
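To make the interplay between the search and the two networks more concrete, here is a minimal sketch of the PUCT-style selection rule used in DeepMind's Go-playing systems (the original AlphaGo also mixed fast rollouts into its position evaluations, which this sketch leaves out). It shows only how a search could choose which move to explore next by combining the policy network's prior with backed-up value estimates. The `Edge` class, the `c_puct` value and the numbers in the usage note are illustrative assumptions, not DeepMind's code.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Edge:
    """Search statistics for one candidate move from the current position."""
    prior: float            # P(s, a): probability the policy network gives this move
    visits: int = 0         # N(s, a): how many times the search has explored it
    value_sum: float = 0.0  # total of value estimates backed up through it (player-to-move view)

    @property
    def q(self) -> float:
        """Q(s, a): mean backed-up value (0 for a move not yet explored)."""
        return self.value_sum / self.visits if self.visits else 0.0


def select_move(edges: dict[str, Edge], c_puct: float = 1.5) -> str:
    """Return the move maximising Q(s, a) + U(s, a).

    U grows with the policy prior and shrinks as a move is visited more, so the
    search balances trusting the value estimates with exploring promising moves.
    """
    # max(..., 1) lets the priors break the tie before anything has been visited.
    sqrt_total = math.sqrt(max(sum(e.visits for e in edges.values()), 1))

    def score(move: str) -> float:
        e = edges[move]
        return e.q + c_puct * e.prior * sqrt_total / (1 + e.visits)

    return max(edges, key=score)


def backup(edge: Edge, value: float) -> None:
    """Record one value estimate (e.g. from the value network) for an explored move."""
    edge.visits += 1
    edge.value_sum += value
```

For example, with priors of 0.6, 0.3 and 0.1 for three moves, the search first explores the 0.6 move; if the value estimate backed up through it is poor (say -1), the next selection switches to the 0.3 move.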

Taking the ideas one step further, MuZero matches the performance of AlphaZero on Go, chess and shogi and also masters a range of visually complex Atari games, all without being told the rules of any game. Meanwhile, DeepMind's AlphaFold, its most recent headline system, predicts the 3D structure of proteins from their amino-acid sequences in a fraction of the time experimental methods take.

OpenAI

GPT-3 is one of the most talked-about transformer models globally, but its creator, OpenAI, is not done yet. In a recent Q&A session, CEO Sam Altman spoke about the upcoming language model GPT-4, which some reports expect to have as many as 100 trillion parameters, more than 500 times GPT-3's 175 billion.

Altman also gave a sneak peek into GPT-5, saying that it might pass the Turing test. Overall, OpenAI aims to achieve artificial general intelligence by extending its series of transformer models into new areas.

Today, GPT-3 competes with the likes of EleutherAI's GPT-J, BAAI's Wu Dao 2.0 and Google's Switch Transformer, among others. OpenAI recently launched OpenAI Codex, an AI system that translates natural language into code. A descendant of GPT-3, it was trained on both natural language and billions of lines of source code from publicly available sources, including code in public GitHub repositories.

Facebook

Facebook has become synonymous with self-supervised learning, which it pursues across domains through fundamental, open scientific research, with the aim of improving the image, text, audio and video understanding systems in its products. Self-supervised models such as its pretrained cross-lingual language model XLM are already accelerating important applications at Facebook, like the proactive detection of hate speech. Its XLM-R, a model built on the RoBERTa architecture, improves hate-speech classifiers in multiple languages across Instagram and Facebook.
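Models such as XLM-R are pretrained with a masked-language-modelling objective: some tokens are hidden and the model must predict them, so unlabelled text supplies its own labels. The sketch below shows only that data-preparation step, using the 15% / 80-10-10 masking recipe popularised by BERT and also used by RoBERTa. The whitespace tokenisation, the `<mask>` string and the tiny vocabulary are simplifying assumptions, not Facebook's actual pipeline.

```python
import random

MASK = "<mask>"  # placeholder; real tokenisers define their own special mask token


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """Turn unlabelled tokens into a masked-LM training example.

    Returns (inputs, labels): labels hold the original token at positions the
    model must predict and None everywhere else.
    """
    rng = rng or random.Random()
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)                    # the model is trained to recover this
            roll = rng.random()
            if roll < 0.8:
                inputs.append(MASK)               # 80%: hide it behind the mask token
            elif roll < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: swap in a random token
            else:
                inputs.append(tok)                # 10%: leave it unchanged
        else:
            labels.append(None)                   # not a prediction target
            inputs.append(tok)
    return inputs, labels
```

Calling, say, `mask_tokens(sentence.split(), vocab, rng=random.Random(0))` returns a corrupted copy of the sentence together with the tokens the model must reconstruct; no human annotation is involved.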

Facebook believes that self-supervised learning is the right path to human-level intelligence. It accelerates research in this area by sharing its latest work publicly, publishing at top conferences, organising workshops and releasing libraries. Its recent work in self-supervised learning includes VICReg, Textless NLP and DINO.
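VICReg (variance-invariance-covariance regularisation) trains an encoder on two augmented views of the same images without labels or negative pairs. As we understand the paper, its loss combines three terms: an invariance term that pulls the two views' embeddings together, a variance term that keeps each embedding dimension's standard deviation above a threshold γ so the representations cannot collapse to a constant, and a covariance term that pushes off-diagonal covariances towards zero. The pure-Python sketch below computes such a loss for small batches. The default weights (λ = μ = 25, ν = 1, γ = 1, ε = 1e-4) follow our reading of the paper, and a real implementation would use a tensor library and backpropagate through an encoder.

```python
import math


def _covariance(z):
    """Unbiased d x d covariance matrix of a batch z (n rows, d columns)."""
    n, d = len(z), len(z[0])
    means = [sum(row[j] for row in z) / n for j in range(d)]
    centred = [[row[j] - means[j] for j in range(d)] for row in z]
    return [[sum(r[a] * r[b] for r in centred) / (n - 1) for b in range(d)] for a in range(d)]


def vicreg_loss(z_a, z_b, lam=25.0, mu=25.0, nu=1.0, gamma=1.0, eps=1e-4):
    """VICReg-style loss for embeddings of the same n inputs under two augmentations."""
    n, d = len(z_a), len(z_a[0])

    # Invariance: mean squared difference between the two views' embeddings.
    invariance = sum((a - b) ** 2 for ra, rb in zip(z_a, z_b) for a, b in zip(ra, rb)) / (n * d)

    def variance_term(z):
        # Hinge on each dimension's standard deviation: penalise it only below gamma.
        cov = _covariance(z)
        return sum(max(0.0, gamma - math.sqrt(cov[j][j] + eps)) for j in range(d)) / d

    def covariance_term(z):
        # Squared off-diagonal covariances: push dimensions to carry different information.
        cov = _covariance(z)
        return sum(cov[i][j] ** 2 for i in range(d) for j in range(d) if i != j) / d

    return (lam * invariance
            + mu * (variance_term(z_a) + variance_term(z_b))
            + nu * (covariance_term(z_a) + covariance_term(z_b)))
```

A batch in which every embedding is identical (a collapsed representation) is penalised by the variance term, whereas a spread-out, decorrelated batch compared with itself scores zero.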

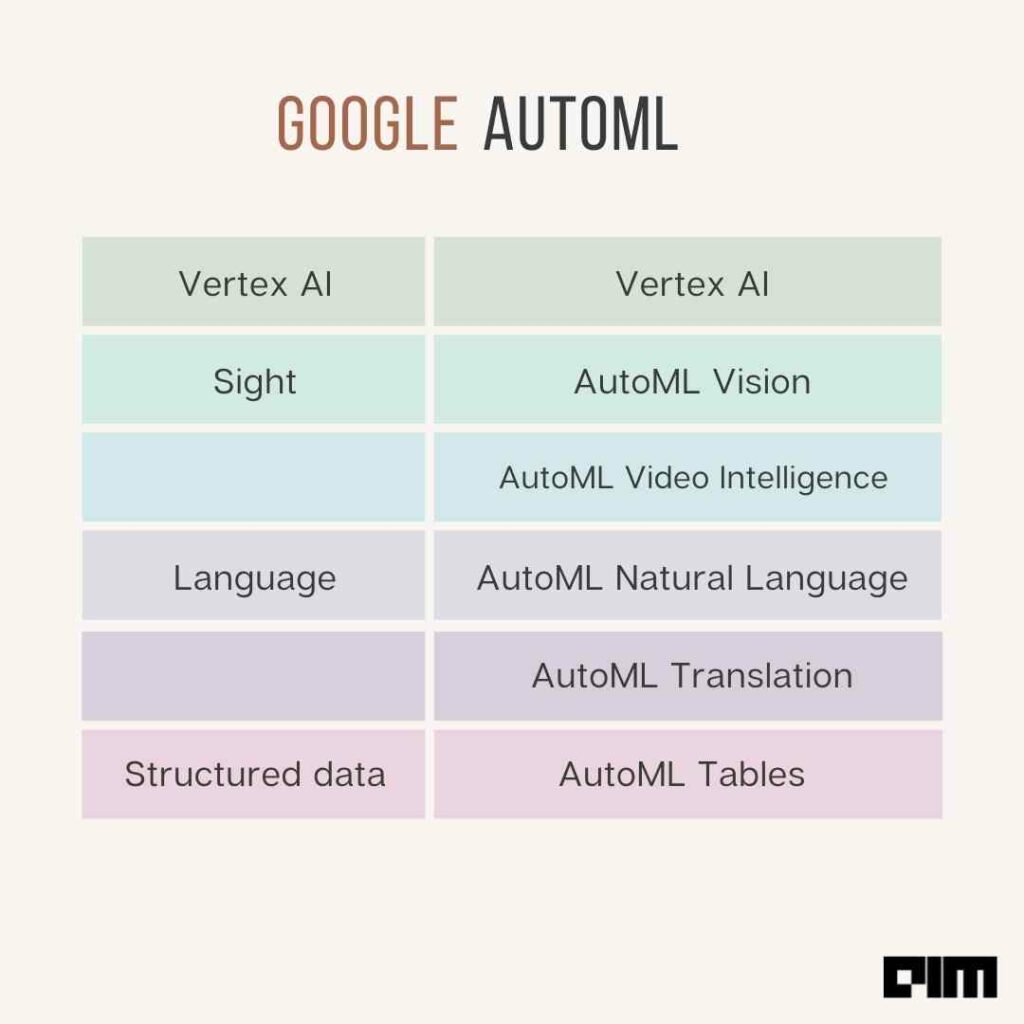

Google

Google is one of the pioneers of automated machine learning (AutoML), which it is advancing in areas as diverse as time-series analysis and computer vision. Earlier this year, Google Brain researchers introduced PyGlove, a new way of programming AutoML based on symbolic programming. PyGlove is a general symbolic programming library for Python that implements a symbolic formulation of AutoML.
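The core idea behind symbolic AutoML is that a model or training setup is written once with some choices left symbolic, and a search algorithm then fills those choices in. The toy sketch below illustrates that idea in plain Python with random search. `OneOf`, `materialize`, `random_search` and the search space are hypothetical names invented for this illustration; they are not PyGlove's actual API.

```python
import random
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class OneOf:
    """Hypothetical symbolic placeholder: 'one of these candidates', resolved by a search."""
    candidates: tuple


def materialize(space: Any, rng: random.Random) -> Any:
    """Replace every OneOf in a (possibly nested) spec with a concrete choice."""
    if isinstance(space, OneOf):
        return materialize(rng.choice(space.candidates), rng)
    if isinstance(space, dict):
        return {key: materialize(value, rng) for key, value in space.items()}
    if isinstance(space, list):
        return [materialize(value, rng) for value in space]
    return space  # already concrete


def random_search(space, evaluate, trials=20, seed=0):
    """Sample concrete configurations from the space and keep the best-scoring one."""
    rng = random.Random(seed)
    best, best_score = None, float("-inf")
    for _ in range(trials):
        candidate = materialize(space, rng)
        score = evaluate(candidate)
        if score > best_score:
            best, best_score = candidate, score
    return best, best_score


# Hypothetical search space: the model spec is written once, with choices left open.
SEARCH_SPACE = {
    "layers": [{"units": OneOf((32, 64, 128)), "activation": OneOf(("relu", "tanh"))}] * 2,
    "learning_rate": OneOf((1e-3, 1e-2)),
}
```

Because the search space and the search algorithm are separate objects, random search could be swapped for a smarter algorithm without touching the model definition; the PyGlove work emphasises this kind of decoupling.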

Some of its latest products in this area include Vertex AI, AutoML Video Intelligence, AutoML Natural Language, AutoML Translation, and AutoML Tables.

Apple

Machine learning on user data comes with privacy challenges. To tackle the issue, Apple has, in the last few years, ventured into federated learning. For those unaware, federated learning is a decentralised form of machine learning in which models are trained on users' devices and only model updates, not raw data, are shared. It was first introduced by Google researchers in 2016 in a paper titled 'Communication-Efficient Learning of Deep Networks from Decentralized Data', and has since been widely adopted across the industry to train machine learning models on edge devices while maintaining the privacy and security of user data.
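The algorithm proposed in that 2016 paper, Federated Averaging (FedAvg), can be sketched as follows: each client trains the current model on its own data and sends back only its updated weights, which the server averages, weighting each client by its number of examples. This is a toy, single-process simulation under simplifying assumptions (a one-parameter linear model y ≈ w·x, plain per-example gradient descent, made-up client data); it omits client sampling, secure aggregation and everything else a production system would need.

```python
def local_update(w, data, lr=0.1, epochs=5):
    """Run a few epochs of gradient descent on one client's private (x, y) pairs.

    Model: y ≈ w * x with squared-error loss. The raw data never leaves this function.
    """
    for _ in range(epochs):
        for x, y in data:
            grad = 2 * (w * x - y) * x  # derivative of (w*x - y)^2 with respect to w
            w -= lr * grad
    return w


def fedavg_round(global_w, clients, lr=0.1, epochs=5):
    """One round of Federated Averaging: local training, then a size-weighted average."""
    total = sum(len(data) for data in clients)
    new_w = 0.0
    for data in clients:
        client_w = local_update(global_w, data, lr, epochs)  # would run on the device
        new_w += (len(data) / total) * client_w              # server sees only weights
    return new_w
```

Running a few rounds on clients whose private data all follow y = 3x drives the shared weight towards 3, even though the server never sees a single (x, y) pair.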

In 2019, Apple, in collaboration with Stanford University, released a research paper titled 'Protection Against Reconstruction and Its Applications in Private Federated Learning', which showcased practical approaches to large-scale, locally private model training that were previously impossible. The research also explored theoretical and empirical ways to fit large-scale image classification and language models with little degradation in utility.


Apple designs all its products to protect user privacy and give users control of their data. Despite the challenges, the tech giant is working on innovative ways to offer privacy-focused products and apps by leveraging federated learning and other decentralised techniques.

Microsoft

Microsoft Research has become one of the leading AI labs globally, pioneering machine teaching research and technology in computer vision and speech analysis. It offers resources across the spectrum, including intelligence, systems, theory and other sciences.

Under intelligence, it covers research areas such as artificial intelligence, computer vision, and search and information retrieval. Under systems, it offers resources in quantum computing, data platforms and analytics, security, privacy and cryptography, and more. It has also become a go-to platform for lecture series, sessions and workshops.

Earlier, Microsoft launched Machine Learning for Beginners, a free curriculum that teaches students the basics of machine learning. Azure Cloud Advocates and Microsoft Student Ambassadors, acting as authors, contributors and reviewers, put together lesson plans that use pre- and post-lesson quizzes, infographics, sketchnotes and assignments to help students build machine learning skills.

Amazon

Amazon has become one of the leading research hubs for transfer learning, thanks to its work on the Alexa digital assistant. It has since pushed transfer learning research on several fronts, from transferring knowledge across language models and techniques to improving machine translation.

Amazon researchers have published several works in transfer learning. For example, in January this year, they proposed ProtoDA, an efficient transfer learning approach for few-shot intent classification.
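As its name suggests, ProtoDA builds, as we understand it, on prototypical networks, a standard few-shot technique in which each class is represented by the mean ("prototype") of the embeddings of its few labelled examples, and a new input is assigned to the nearest prototype. The sketch below shows only that generic classification step, not ProtoDA's meta-learning or data augmentation. The intent names and the 2-D vectors in the usage note are hypothetical stand-ins for the output of a trained sentence encoder.

```python
import math


def _sq_dist(a, b):
    """Squared Euclidean distance between two embedding vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def prototypes(support):
    """Average the embeddings of each class's few labelled examples."""
    protos = {}
    for label, vectors in support.items():
        dim = len(vectors[0])
        protos[label] = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    return protos


def classify(query, protos):
    """Assign the query embedding to the class whose prototype is nearest."""
    return min(protos, key=lambda label: _sq_dist(query, protos[label]))


def class_probabilities(query, protos):
    """Softmax over negative squared distances, as in prototypical networks."""
    labels = list(protos)
    logits = [-_sq_dist(query, protos[label]) for label in labels]
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return {label: e / total for label, e in zip(labels, exps)}
```

For instance, with two hypothetical intents, `play_music` and `set_alarm`, each described by two example embeddings, a query embedding close to the `play_music` examples is assigned to `play_music`, and adding a new intent only requires a handful of labelled examples rather than retraining.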


IBM

IBM pioneered technology in many machine learning areas; in the 1950s, for example, IBM's Arthur Samuel developed a computer programme for playing checkers. Although it has since lost its leadership position to other tech companies, in the 2020s IBM is pushing its research boundaries in quantum machine learning.

The company is now pioneering specialised hardware and building libraries of circuits so that researchers, developers and businesses can tap into quantum computing as a service through the cloud, using their preferred programming language and without needing expertise in quantum computing.

By 2023, IBM aims to offer entire families of pre-built runtimes across domains, callable from a cloud-based API and usable with various common development frameworks. It believes it has already laid the foundations with quantum kernel and algorithm developers, which will help enterprise developers explore quantum computing models independently, without having to think about quantum physics.
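A quantum kernel measures the similarity of two data points as the overlap between the quantum states they are encoded into; a classical model such as a support vector machine can then use that similarity. As a purely classical illustration, not IBM's or Qiskit's implementation, the sketch below assumes the simplest possible encoding: each feature x_i sets the angle of an RY rotation on its own qubit, starting from |0⟩. For that product-state encoding, the fidelity |⟨ψ(x)|ψ(y)⟩|² works out to ∏ cos²((x_i − y_i)/2), and the code computes it by simulating the single-qubit amplitudes directly. Practical quantum kernels use entangling feature maps that are hard to simulate classically, which is the point of running them on quantum hardware.

```python
import math


def encode(features):
    """Assumed angle encoding: RY(x)|0> = cos(x/2)|0> + sin(x/2)|1>, one qubit per feature.

    Returns one (amp0, amp1) pair of real amplitudes per qubit.
    """
    return [(math.cos(x / 2), math.sin(x / 2)) for x in features]


def fidelity_kernel(x, y):
    """k(x, y) = |<psi(x)|psi(y)>|^2 for the product-state encoding above."""
    overlap = 1.0
    for (a0, a1), (b0, b1) in zip(encode(x), encode(y)):
        overlap *= a0 * b0 + a1 * b1  # per-qubit inner product (amplitudes are real here)
    return overlap ** 2


def kernel_matrix(points):
    """Gram matrix that a classical SVM could consume as a precomputed kernel."""
    return [[fidelity_kernel(p, q) for q in points] for p in points]
```

Identical inputs give a kernel value of 1, and inputs whose encoded states are orthogonal (for example, angles 0 and π on a single qubit) give 0.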

In other words, developers will be free to enrich systems built in any cloud-native hybrid runtime, language and programming framework, or to simply integrate quantum components into any business workflow.

Wrapping up

This article paints a bigger picture of where the research efforts of the big AI labs are heading. Overall, research in deep learning seems to be moving in the right direction. Hopefully, the AI industry gets to reap the benefits sooner rather than later.