Abstract

For several industries, traditional manufacturing processes are time-consuming and uneconomical because the right tools to produce the products are lacking. Over the last couple of years, machine learning (ML) algorithms have become more prevalent in manufacturing for developing items and products with reduced labor cost, time, and effort. Digitalization with cutting-edge manufacturing methods and massive data availability have further boosted the need for, and interest in, integrating ML and optimization techniques to enhance product quality. ML-integrated manufacturing methods increase acceptance of new approaches, save time, energy, and resources, and avoid waste. ML-integrated assembly processes help create what is known as smart manufacturing, where technology automatically corrects errors in real time to prevent any spillage. Though manufacturing sectors use different techniques and tools for computing, recent methods such as ML and data mining are instrumental in solving challenging industrial and research problems. Therefore, this paper discusses the current state of ML techniques, focusing on one modern manufacturing method, namely additive manufacturing. The main categories, covering the design, processes, and production control of additive manufacturing, are described in the form of a state-of-the-art review.

Introduction

Ever since the dawn of humankind, technology has evolved at its own pace. Humans once struck stones to light fires and make up for the absence of the mighty sun. Now technology has evolved to the point of replicating the sun’s power itself (Sivaram, 2018). Such technologies have revolutionized the entire world. Starting with the steam engines of the eighteenth century, industries worldwide have undergone a substantial self-upgradation. The nineteenth century witnessed a revolution driven by one of humankind’s best inventions, electricity. With this invention, factories became better equipped with self-operating mechanisms, auto-driving motors, etc. This revolution ignited the spark for the next, which occurred in the late 1960s with the invention of computers. Later on, it was universally recognized as the 3rd industrial revolution (Ali et al., 2021; Hudson, 1982; Singh et al., 2013). The concept of automation began in this revolution, which immensely helped mass production (David, 2017; Dunk, 1992; Kuric et al., 2018; Wu et al., 2015a). With the innovation of computers, several manufacturing concepts were advanced, increasing production effectiveness (Azzone & Bertele, 2007; Clegg et al., 2010; Gorecky et al., 2014; Hudson, 1982; Singh et al., 2013; Wagner et al., 2008).

It is almost 50 years since the 3rd industrial revolution, and manufacturing sectors worldwide are preparing for the next. This is the 4th industrial revolution, a modern era driven by the internet and the use of computers (Frank et al., 2019; Hofmann & Rüsch, 2017; Vaidya et al., 2018). Industry 4.0 builds on the advantages of Industry 3.0: computers are connected so that they can communicate with one another and take decisions without human participation (Dalenogare et al., 2018; Frank et al., 2019; Ghobakhloo, 2020). Cutting-edge technologies like cyber-physical systems, the Internet of Things (IoT), and the Internet of Systems are the major drivers of Industry 4.0. The concept of the intelligent factory has now become a reality (Dalenogare et al., 2018; Gorecky et al., 2014). Due to artificial intelligence and easy access to more data, smart machines are getting smarter day by day. This helps industries become better organized, more efficient, and more optimized (Ghobakhloo, 2020; Gorecky et al., 2014). Ultimately, it is the network of machines, digitally connected to share information, that delivers the true power of Industry 4.0 (Gorecky et al., 2014; Vaidya et al., 2018; Wang et al., 2015). Nascent technologies are advancing rapidly, with the ultimate aim of helping humanity in all possible ways. Industrial processes are advancing in parallel with the advent of these new technologies. Thus, all manufacturing sectors must implement Industry 4.0 technologies such as cyber-physical systems (CPS), big data analytics, 3-D printing, IoT, Artificial Intelligence (AI), Additive Manufacturing (AM) (Guo et al., 2020; Zhou et al., 2021), and Machine Learning (ML). All these techniques hold great potential for sustainable manufacturing (Chu et al., 2014; Jin et al., 2020; Kumar & Kishor, 2021; Paturi & Cheruku, 2021; Tizghadam et al., 2019).

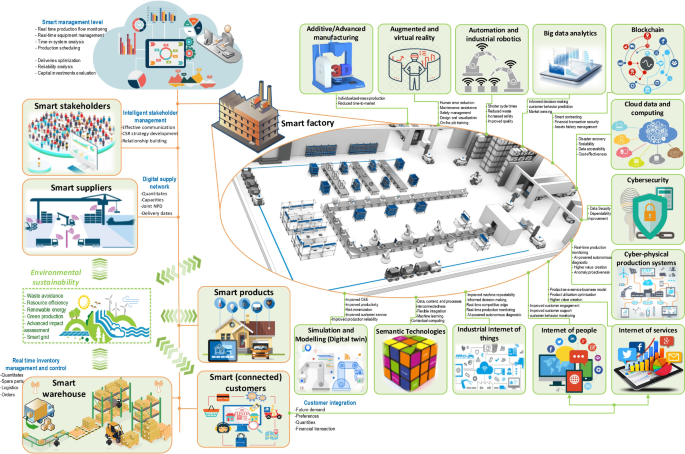

Industry 4.0 is a transformation of manufacturing factories that makes it possible to gather and analyse data across machines, enabling faster, more flexible, and more efficient processes that produce higher-quality goods at reduced cost (Chu et al., 2014; Gorecky et al., 2014; Jin et al., 2020; Kumar & Kishor, 2021; Paturi & Cheruku, 2021; Tizghadam et al., 2019; Vaidya et al., 2018). This new revolution of the twenty-first century will increase productivity, shift economics, enrich industrial growth, and modify the workforce profile (Gorecky et al., 2014; Vaidya et al., 2018; Wang et al., 2015). Figure 1 explains the digitization process of Industry 4.0, including the functional domains of its major components. Various recent technologies are involved in implementing and enabling digitization. Some of them, such as industrial robots, industrial automation, cloud computing, the internet of things and services, and AI, are widely used in industrial applications. Most of these technologies have reached saturation in terms of their integration into digitization (Nascimento et al., 2018; Yin et al., 2017).

The term Industry 4.0 collectively refers to a wide range of current concepts, which in individual cases can be neither clearly assigned to a discipline nor precisely distinguished from one another. The fundamental concepts are listed below:

- Smart Factory: Manufacturing will be completely equipped with sensors, actuators, and autonomous systems. By combining “smart technology” based on holistically digitalized models of products and factories (digital factory) with various technologies of Ubiquitous Computing, so-called “Smart Factories” emerge, which are autonomously controlled (Lucke et al., 2008).

- Cyber-physical Systems: The physical and the digital levels merge. If this covers the level of production as well as that of the products, systems emerge whose physical and digital representations can no longer be reasonably distinguished. An example can be observed in the area of preventive maintenance: process parameters (stress, productive time, etc.) of mechanical components subject to (physical) wear and tear are recorded digitally. The real condition of the system results from the physical object together with its digital process parameters.

- Self-organization: Existing manufacturing systems are becoming increasingly decentralized. This comes along with a decomposition of classic production hierarchy and a change towards decentralized self-organization.

- New systems in distribution and procurement: Distribution and procurement will increasingly be individualized. Connected processes will be handled by using various different channels.

- New systems in the development of products and services: Product and service development will be individualized. In this context, approaches of open innovation and product intelligence as well as product memory are of outstanding importance.

- Adaptation to human needs: New manufacturing systems should be designed to follow human needs instead of the reverse.

- Corporate Social Responsibility: Sustainability and resource efficiency are increasingly the focus of the design of industrial manufacturing processes. These factors are fundamental framework conditions for successful products.

“Industry 4.0” describes different, primarily IT-driven, changes in manufacturing systems. These developments have not only technological but also wide-ranging organizational implications. First, a shift from product orientation to service orientation is expected, even in traditional industries. Second, new types of enterprises can be anticipated that adopt new, specific roles within the manufacturing process or the value-creation networks. For instance, comparable to brokers and clearing points in financial services, analogous types of enterprises may also appear within industry.

The planning, analysis, modeling, design, implementation, and maintenance (in short: the development) of such highly complex, dynamic, and integrated information systems poses an attractive and at the same time challenging task for the academic discipline of business and information systems engineering (BISE), one that can secure and further develop the competitiveness of industrial enterprises.

Zhou et al. (2020) proposed a PD-type iterative learning algorithm to identify a category of distinct spatially unified systems with unstructured indecision. Based on the stability theory of repetitive processes, suitable conditions for the system’s stability are provided as linear matrix inequalities (LMIs). Finally, the efficacy of the modelled algorithm is tested using a simulation of ladder circuits. Xin et al. (2022) suggested a novel online integral reinforcement learning algorithm for solving multiplayer non-zero-sum games. They proved the convergence of the iterative algorithm, and the results showed the effectiveness and feasibility of this design method. Stojanovic et al. (2020) proposed two classes of approaches for robust joint parameter-state estimation of linear stochastic models subject to major faults and non-Gaussian noise, which were duly supported by experimental results.

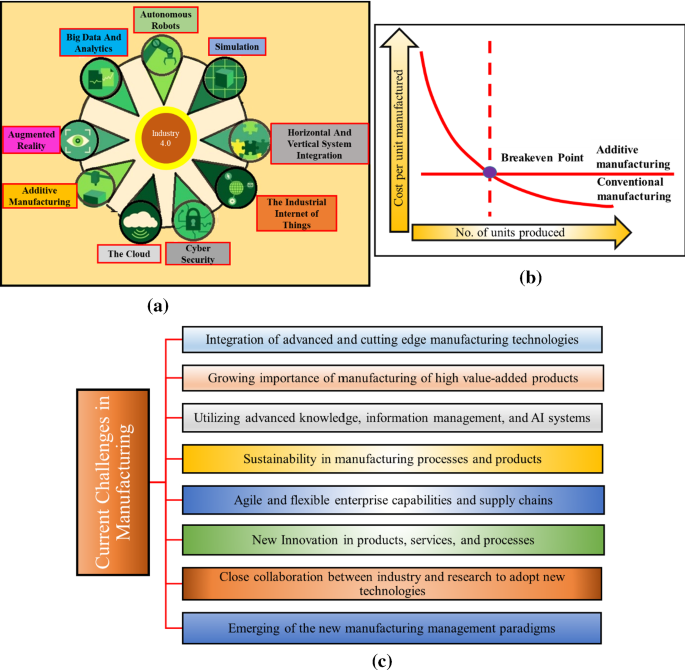

The concept of Industry 4.0 can be made possible only through nine new technologies, as shown in Fig. 2a. When interlinked, these can raise conventional processes to the level of Industry 4.0. Mass customization and personalization are the two influential features in implementing Industry 4.0 guidelines. AM, along with AI and ML methods, has excellent potential for final product personalization. Yet there are a few difficulties with implementing AM in mass production. For example, beyond a certain point the cost associated with AM is significantly higher than that of conventional machining (Fig. 2b).

Besides, industries remain hesitant to implement AM because of its inability to produce large components of the desired shape and strength. Much research has sought to tackle the issues in applying AM techniques directly to mass production; however, this is still under exploration. The concepts of Industry 4.0 will be a significant boost to all manufacturing industries, especially AM- and ML-based ones, in overcoming the difficulties of implementing the new technologies. The combined effect and advantage of these technologies can yield success in multiple manufacturing domains. Though these technologies are interdependent, each can also be deployed independently to increase the profit margin of manufacturing. Though Industry 4.0 is in practice in some multinational companies, small-scale industries and SMEs are still far from implementing its concepts. The foremost challenge lies in the installation cost and the knowledge required to work with it, which are hard to accommodate for low-scale production units. Manufacturing practitioners firmly believe that Industry 4.0 will dominate all sectors in this century irrespective of the prevailing situation; therefore, large-scale national and multinational companies have shown massive interest in implementing it without delay.

Motivation and background

The central focus of this article is to estimate the level of interest and effort required to incorporate ML techniques into present manufacturing industries. Furthermore, by identifying subpopulations of related and relevant literature, this work aims to identify critical areas of application of ML technologies. The paper also identifies the relevant gaps in the deployment of ML techniques, which are presented as future research scope. The technology available now enables us to design and develop products as per industrial needs. With the advent of digital media, it has become easy to search and download the research literature, which was quite cumbersome with manual methods (Chonde, 2016). Various search engines and repositories such as Google Scholar and ScienceDirect return detailed information when keywords or simple phrases are entered. These searches rely on the input words and do not capture the underlying mechanisms or concepts involved. In this review work, Latent Semantic Analysis (LSA) was used to develop the relation between sentences and documents (Dumais, 2004). This emphasizes not just simple word matching, as with traditional searches, but matching of core concepts.
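To make the idea of concept matching concrete, the following is a minimal sketch of LSA in plain Python. It is not the pipeline used for this review, whose details are not given here; the toy documents, the use of raw word counts instead of a weighting scheme, and all function names are assumptions made for illustration. The sketch obtains the reduced document coordinates from the top eigenpairs of the document-by-document matrix AᵀA (A being the term-document count matrix), which is equivalent to a truncated singular value decomposition of A.

```python
import math
from collections import Counter

def gram_matrix(docs):
    """Document-by-document matrix A^T A built from raw word counts."""
    counts = [Counter(d.lower().split()) for d in docs]
    n = len(counts)
    return [[float(sum(counts[i][w] * counts[j][w] for w in counts[i]))
             for j in range(n)] for i in range(n)]

def top_eigenpairs(sym, k, iters=500):
    """Top-k eigenpairs of a symmetric PSD matrix (power iteration + deflation)."""
    n = len(sym)
    mat = [row[:] for row in sym]
    pairs = []
    for _ in range(k):
        v = [float(i + 1) for i in range(n)]  # fixed, non-uniform start vector
        for _ in range(iters):
            w = [sum(mat[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        lam = sum(v[i] * sum(mat[i][j] * v[j] for j in range(n)) for i in range(n))
        pairs.append((lam, v))
        # Deflate: remove the component that was just found.
        mat = [[mat[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return pairs

def lsa_document_vectors(docs, k=2):
    """Coordinates of each document in a k-dimensional latent space."""
    pairs = top_eigenpairs(gram_matrix(docs), k)
    # Eigenvalues of A^T A are the squared singular values of A.
    return [[math.sqrt(max(lam, 0.0)) * v[j] for lam, v in pairs]
            for j in range(len(docs))]

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return dot / (na * nb) if na and nb else 0.0

# Hypothetical mini-corpus: documents 0 and 2 share no words,
# but both are linked to document 1.
DOCS = ["laser melting", "melting pool", "pool defects",
        "supply chain", "chain cost"]
VECS = lsa_document_vectors(DOCS, k=2)
SIM_SAME_TOPIC = cosine(VECS[0], VECS[2])
SIM_OTHER_TOPIC = cosine(VECS[0], VECS[3])
```

In this toy corpus, "laser melting" and "pool defects" have no word in common, so keyword matching scores them as unrelated, whereas in the latent space they lie close together because both co-occur with "melting pool". A realistic corpus would normally need term weighting, stop-word removal, and a library SVD routine.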

The enormous range of application domains and the ease of innovation in ML techniques, which were once considered inappropriate or infeasible, have permitted their enhanced integration and realization in the modern era. As ML spread, manufacturing industries also welcomed it to gain economic benefits. Presently, the manufacturing sector is undergoing major changes due to the great demand to implement intelligent manufacturing and Industry 4.0. However, there is still major hesitation in several sectors, especially mid- and lower-cap companies, due to fear of the cost and training that implementation incurs. Thus, another motivation of this article is to summarize the past decade’s publications to help quantify the efforts made toward integrating intelligent techniques such as ML in manufacturing. Major ML application areas and popular ML algorithms are detailed in different sections, which also highlight ML’s limitations.

A critical examination of focus areas and major gaps (what advancement or research and development can be proposed) should be undertaken so that literature and research gaps can be filled and ML can find widespread application not only in manufacturing but in other domains as well. Efficient integration of ML is also possible when its algorithms are judged on real-life problems that are otherwise challenging, time-consuming, and expensive to tackle. To address the above-mentioned motivations, a detailed literature analysis was made, with a major focus on presenting the recent state of the art for ML algorithms and analyzing the cross-domain possibilities for ML across the product life cycle.

Three motivating questions are considered:

- Which ML algorithm is selected, and on the basis of what parameters?

- How frequently is each ML method applied to a manufacturing process, and at what level?

- Which shortcomings remain unaddressed, and what advancements can be made or suggested for better integration of ML and its cross-examination?

Intelligence is a primary need for learning, which is an essential feature of smart manufacturing. ML algorithms are known to yield an optimum solution that depends on the requirements derived from the variables involved. Major hurdles to ML integration in manufacturing have been, or are on the verge of being, overcome; however, their optimum combination still remains to be worked out. This paper also covers the major ML techniques implementable in manufacturing, including their strengths and limitations. The fit between an ML technique, the product type, and its future perspective should be addressed before making any judgment. Still, several unsolved issues should be taken care of before implementing ML.

Thus, this article is framed to address the potential domains of ML in AM processes, including the need for and challenges associated with AM; ML and its algorithms, their applications and limitations; ML in the design of AM processes (topological and material design); ML for AM processes (process parameter optimization, process monitoring, defect assessment, quality prediction, closed-loop control, geometric deviation control, and cost estimation); and ML for AM production (planning, quality control, data security, dimensional deviation management).

Challenges of the manufacturing domain

Manufacturing industries have evolved over the centuries and are an integral part of a country’s economy. A few countries with mature economies have seen the contribution of manufacturing to their GDP decline over the last decades. Efforts are constantly being made to revamp the manufacturing sectors and boost the economy. In 2014, the US started an initiative ‘Executive Actions to Strengthen Advanced Manufacturing in America’ to improve the country’s employment rate and support the manufacturing factories (Anderson, 2011). The European Union came up with the ‘Factories of the Future’ program to identify the industries of the future and nurture them (Mavrikios et al., 2011). Manufacturing sectors face more varied problems nowadays than in the past, whether in welding, 3D printing, forming, or casting (Kumar, 2016; Kumar & Wu, 2018, 2021a; Kumar et al., 2017, 2019, 2020a). Environmental pollution, financial crises, and geopolitical factors are being taken very seriously. At present, multiple research reports in the open literature address the major challenges the manufacturing sector faces in advancing to global standards. The conclusions of a few are given in Fig. 2c. The above-discussed challenges explain the ongoing trend in manufacturing factories. These challenges make the business complicated and fragile. The complexity is inherent in the industrial process itself and in the company’s business processes (Wiendahl & Scholtissek, 1994). The dynamic business scenario of manufacturing industries is prominently influenced by several uncertainties that complicate existing factors (Monostori, 2003). To overcome these complexities, sophisticated techniques like ML and AI can be brought in. These techniques, which are driven entirely by data, can resolve key industrial issues. They can take raw, highly non-linear data and transform it into a model that can be used for prediction, recognition, categorization, analysis, and projection.

Uncertainty in AM

One of the major barriers to realizing the significant potential of AM techniques is the variation in the quality of the manufactured parts. Uncertainty quantification (UQ) and uncertainty management (UM), based on modeling and simulation of the AM process, can help resolve this challenge. From powder bed forming to melting and solidification, various sources of uncertainty are involved in the process. These sources of uncertainty result in variability in the quality of the manufactured component. The quality variation hinders consistent manufacturing of products with guaranteed high quality. This becomes a major hurdle for the wide application of AM techniques, especially in the manufacturing of metal components. To achieve quality control of the AM process, a good understanding of the uncertainty sources in each step of the process and of their effects on product quality is needed. UQ is the process of investigating the effects of uncertainty sources (aleatory and epistemic) on the quantities of interest (QoIs) (Hu & Mahadevan, 2017; Hu et al., 2016). Even though UQ for models of physical hardware has been intensively studied during the past decades and continues to address important research questions, UQ in AM is still at an early stage. Only a few examples have been reported in the literature (Lopez et al., 2016). In addition, currently reported UQ methods for AM are mainly based on experiments and are performed at the process level. This results in excessive material wastage, increased product development cost, and delays in the product development process (Hu & Mahadevan, 2017), because UQ usually requires numerous experiments and because process optimization and UQ are implemented in a double-loop framework (i.e., UQ needs to be performed repeatedly whenever the process is changed). To ensure the best quality of AM products, UQ and UM have gained increasing attention in recent years. Current research efforts in UQ and UM of AM processes can be roughly classified into three groups: (1) UQ of AM using experiments, (2) UQ of the melting pool model, and (3) UQ of the solidification (microstructure) model.
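As an illustration of what simulation-based UQ means in practice, the sketch below propagates input uncertainty through a simple model by Monte Carlo sampling. It is only a toy under stated assumptions: the quantity of interest is the volumetric energy density E = P / (v · h · t), used here as a stand-in for a real melting pool or solidification model, and the nominal values and input distributions are hypothetical rather than taken from any of the cited studies.

```python
import random
import statistics

def energy_density(power_w, speed_mm_s, hatch_mm, layer_mm):
    """Volumetric energy density E = P / (v * h * t), in J/mm^3."""
    return power_w / (speed_mm_s * hatch_mm * layer_mm)

def monte_carlo_uq(n_samples=10000, seed=0):
    """Propagate input uncertainty to the QoI and summarize its spread."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    samples = []
    for _ in range(n_samples):
        # Hypothetical input distributions (assumptions, not measured data).
        power = rng.gauss(200.0, 5.0)      # laser power, W
        speed = rng.gauss(800.0, 20.0)     # scan speed, mm/s
        hatch = 0.10                       # hatch spacing, mm (treated as exact)
        layer = rng.uniform(0.028, 0.032)  # layer thickness, mm
        samples.append(energy_density(power, speed, hatch, layer))
    return statistics.mean(samples), statistics.stdev(samples)

QOI_MEAN, QOI_STD = monte_carlo_uq()
```

Each model evaluation here is cheap, which is the practical advantage over repeating physical builds; with a high-fidelity simulation in place of `energy_density`, the number of samples becomes the main cost, and the double-loop issue noted above reappears whenever the process settings change.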

Experiment-based UQ of AM process

For UQ at the process level, AM experiments are performed repeatedly at different process parameter settings. Based on the QoI data collected from physical experiments at different input settings, the effects of process parameters on the quality of manufactured products are analyzed statistically (Kamath, 2016). For instance, Delgado et al. (2012) used analysis of variance (ANOVA) to evaluate the effects of scan speed, layer thickness, and building orientation on dimensional error and surface roughness. Raghunath and Pandey (2007) investigated the influence of process variables such as laser power, beam speed, hatch spacing, and scan length on the shrinkage of the product using signal-to-noise (S/N) ratio and ANOVA methods. By identifying the factors that have the most significant effects on the variation of product quality, the quality of AM can be improved by implementing quality control on those influential factors. The Taguchi method has also been employed to design experiments for the uncertainty analysis of AM (Garg et al., 2014; Raghunath & Pandey, 2007).
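The two statistics named above can be sketched in a few lines. The example computes the one-way ANOVA F-statistic for a single factor and the Taguchi "smaller-is-better" S/N ratio, S/N = −10 log₁₀(mean(y²)). The roughness values and the choice of three scan-speed levels are hypothetical and serve only to show the arithmetic; they do not come from the cited experiments.

```python
import math

def one_way_anova_f(groups):
    """F-statistic for one factor; each group holds the replicates at one level."""
    all_values = [y for g in groups for y in g]
    grand_mean = sum(all_values) / len(all_values)
    means = [sum(g) / len(g) for g in groups]
    # Variation explained by the factor (between levels).
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Residual variation (within each level).
    ss_within = sum((y - m) ** 2 for g, m in zip(groups, means) for y in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)

def sn_smaller_is_better(values):
    """Taguchi S/N ratio (dB) for a response that should be minimized."""
    return -10.0 * math.log10(sum(y * y for y in values) / len(values))

# Hypothetical surface roughness replicates (micrometres) at three scan speeds.
ROUGHNESS = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
F_STAT = one_way_anova_f(ROUGHNESS)                   # 13.0 for this data
SN_BY_LEVEL = [sn_smaller_is_better(g) for g in ROUGHNESS]
```

For this data the between-level mean square is 13 and the within-level mean square is 1, giving F = 13 with (2, 6) degrees of freedom; comparing F against the critical value of the F-distribution decides whether the factor is significant. The level with the highest S/N ratio (here the first) is the preferred setting under the smaller-is-better criterion.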

UQ of melting pool

Because the melting pool model is one of the most important models in the AM process, its UQ is of great interest to researchers. For example, Schaaf (1999) performed uncertainty and sensitivity analysis for the melting pool model to identify its most sensitive parameters. Anderson and Delplanque (2015) proposed using DAKOTA (Swiler et al., 2017) and the ALE3D software to explore UQ of the melting process. Most recently, Lopez et al. (2016) performed UQ for the metal melting pool model based on a thermal model developed by Devesse et al. (2014). They identified four sources of uncertainty in the melting pool model, namely model assumptions, unknown simulation parameters, numerical approximations, and measurement error (Adamczak et al., 2014). To reduce the uncertainty, they incorporated online measurement data into the model. Based on these efforts, the effects of uncertain parameters on the shape of the melting pool have been studied.

UQ of solidification

Along with the research efforts in UQ of the melting pool model, efforts have also been devoted to UQ of the solidification model in recent years. Ma et al. (2015) used design of experiments and FE models to identify the critical variables in laser powder bed fusion. Loughnane (2015) developed a UQ framework for microstructure characterization in AM. This framework accounts for sources of characterization error, which are modeled using phantoms. Based on the modeling of error sources, the effects of the errors on the microstructure statistics are analyzed. Statistical analysis and virtual modeling tools have also been developed for the analysis of the microstructure. Park et al. (2014) used a homogenization method to investigate the effect of microstructure on the mechanical properties in the macro-model. Cai and Mahadevan (2016) studied the effect of cooling rate on the microstructure and considered various sources of uncertainty during solidification. The above literature review indicates that UQ and UM of the AM process are still at an early stage.

ML in manufacturing

The manufacturing industry produces a vast amount of data every day (Chand & Davis, 2010). These data comprise various formats, for example monitoring information from the manufacturing line, meteorological specifications, process performance, machining time, and machine tool settings, to name a few (Davis et al., 2015). Different countries use different names for this development; for example, Germany uses Industry 4.0, the USA uses Smart Manufacturing, and South Korea uses Smart Factory. This massive and growing amount of data is sometimes called Big Data (Lee et al., 2013). Such data helps to improve process performance by giving active feedback to the machine. The useful information extracted from Big Data helps to improve process and product quality sustainably (Elangovan et al., 2015). However, such a huge amount of data can also have a negative impact by causing confusion or leading to false conclusions. If the system used to manage such massive data is well established, the data are a boon to the manufacturing industries. The availability of such a reliable data system helps in improving process quality, reducing cost, understanding customers’ expectations, and analysing the business complexity and dynamics involved (Davis et al., 2015; Loyer et al., 2016).

Industrial production, engineering services, materials and processes, environmental simulation, aerospace, computers and privacy, nuclear physics, thermal engineering, electronics and communication, automotive industry, chemical engineering, ergonomics, bio-medical, pharmaceutics, and commerce are some of the fields where ML has attracted many researchers and investigators from around the world (Fahle et al., 2020; Jin et al., 2020; Rai et al., 2021; Sharp et al., 2018; Wang et al., 2020; Wuest et al., 2016). Above all, ML in manufacturing is an area where numerous research works are carried out. ML techniques are used for design, management, scheduling, material resource planning, capacity analysis, quality control (Peres et al., 2019), maintenance, automation, etc. (Fahle et al., 2020; Jin et al., 2020; Rai et al., 2021; Sharp et al., 2018; Wang et al., 2020; Wuest et al., 2016). Rolf et al. (2020) used the genetic algorithm (GA) for solving a hybrid flow scheduling problem. The developed model gave better results than the procedure adopted in industry. Using support vector regression (SVR), Ahmed et al. (2020) estimated the splicing intensity of an unrestrained beam sample. This was one of the first ML studies in architecture and civil materials management. The possibilities of employing the Naïve Bayes classification approach for deterioration detection in construction were investigated by Addin et al. (2007). They demonstrated that machine learning can be used in material science and design. Peters et al. (2007) used the random forest algorithm to model the distribution of Eco hydrology in ecological modelling. In ergonomics, the logistic regression technique was used by McFadden (1997) to forecast the pilot-error incidences of US airline pilots. In nuclear engineering, Dubey et al. (2015) demonstrated the application of the ANN technique to find the power delivery in pressurized heavy water reactors. The above-discussed techniques have also been explored in various fields of engineering, which opened the path to significant developments in solving complex real-time problems (Alade et al., 2020; Ali et al., 2021; Calvé & Savoy, 2000; Hapfelmeier & Ulm, 2014; Piro et al., 2012; Ramachandran et al., 2020; Seibi & Al-Alawi, 1997).

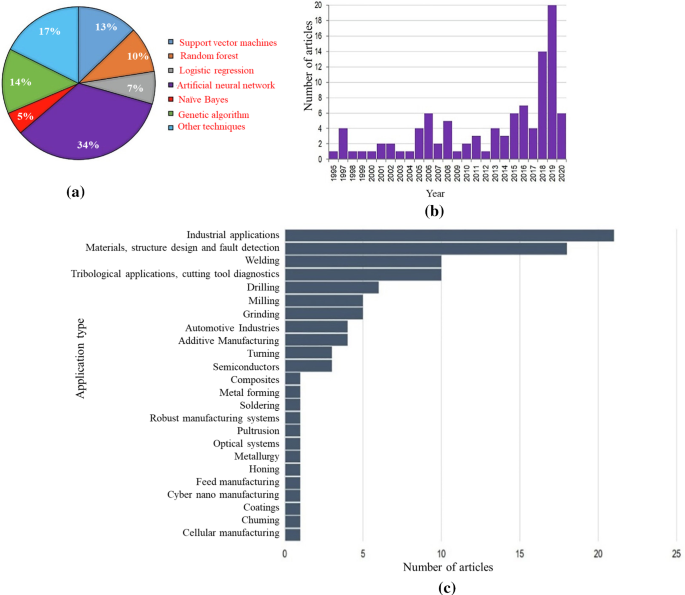

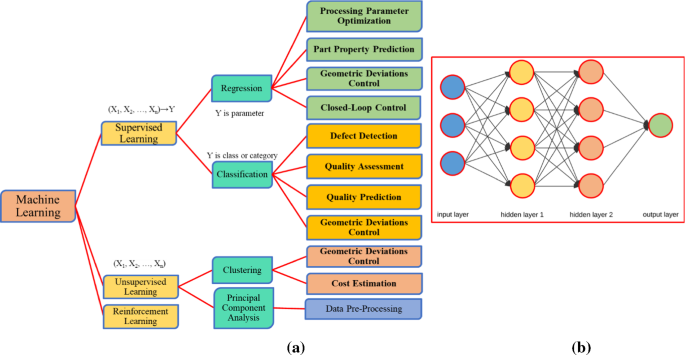

Typically, the two most important aims for any industrial company are product performance and volume. To sustain healthy competition, a firm should produce sophisticated, high-quality products on a mass scale. Therefore, research on computing techniques such as ML and AI in the manufacturing domains has become very significant over the last couple of years. For the past 50 years, AI has helped to improve processes through the constant development of ML models (Zhang & Huang, 1995). Manav et al. (2018) solved turning process optimization using the GA model. Weiwen et al. (2018) developed an SVR model for sensing breakouts in high-speed small hole drilling EDM. Sukumar et al. (2014) developed an ANN model to optimize the process conditions for face milling of Al alloy. Yi et al. (2020) employed the random forest algorithm for performance evaluation in the fused deposition modeling process. In a similar context, Imran et al. (2017) employed genetic algorithm techniques for cellular manufacturing systems. Similarly, massive research work has been carried out in the past few decades to improve processes and reduce cost in the manufacturing industries (D’Addona & Antonelli, 2019; Knoll et al., 2019; Kreutz et al., 2019; Schreiber et al., 2019; Wu et al., 2017). Each algorithm has advantages and disadvantages, and the model’s performance is heavily reliant on the data used to construct it. Singh et al. (2013) created an SVR method to assess layer stresses during the hydro-mechanical deep design method. They examined the efficiency of the procedure using the ANN algorithm with the finite element approach. Niu et al. (2017) conducted comparison research to assess the emission characteristics of a CRDI-assisted marine diesel engine using ANN and support vector machines (SVM-Regression). Similar comparison studies have been done in different fields of the manufacturing industries (Acayaba & Escalona, 2015; Çaydaş & Ekici, 2012; Gokulachandran & Mohandas, 2015; Jurkovic et al., 2018; Tian & Luo, 2020). A comprehensive comparison of ML applicability in different industries was made by Paturi et al. (2021). The summary of their study is shown in Fig. 3a-c.
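Since genetic algorithms recur in the studies cited above, a minimal sketch of a GA applied to process-parameter optimization may help readers unfamiliar with the technique. It does not reproduce any of the cited models: the two parameters (cutting speed and feed), their bounds, the quadratic cost surface, and the operator choices (truncation selection, uniform crossover, Gaussian mutation) are all assumptions made for illustration.

```python
import random

def toy_cost(speed, feed):
    """Hypothetical smooth cost surface with its minimum at speed=150, feed=0.2."""
    return ((speed - 150.0) / 100.0) ** 2 + ((feed - 0.2) / 0.2) ** 2

def genetic_minimize(cost, bounds, pop_size=30, generations=60, seed=0):
    """Minimize cost(*individual) over box bounds with a simple real-coded GA."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    for _ in range(generations):
        # Truncation selection: the better half survives unchanged (elitism).
        pop.sort(key=lambda ind: cost(*ind))
        parents = pop[:pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # Uniform crossover: each gene is taken from one of the two parents.
            child = [rng.choice(pair) for pair in zip(a, b)]
            # Gaussian mutation, clipped back into the allowed range.
            child = [min(hi, max(lo, g + rng.gauss(0.0, 0.05 * (hi - lo))))
                     for g, (lo, hi) in zip(child, bounds)]
            children.append(child)
        pop = parents + children
    return min(pop, key=lambda ind: cost(*ind))

BOUNDS = [(50.0, 300.0), (0.05, 0.5)]  # hypothetical speed and feed ranges
BEST = genetic_minimize(toy_cost, BOUNDS)
BEST_COST = toy_cost(*BEST)
```

In a real study the cost function would be replaced by a measured or simulated response such as surface roughness, tool wear, or makespan, and each evaluation would be far more expensive, which is why population size and the number of generations are usually kept small.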

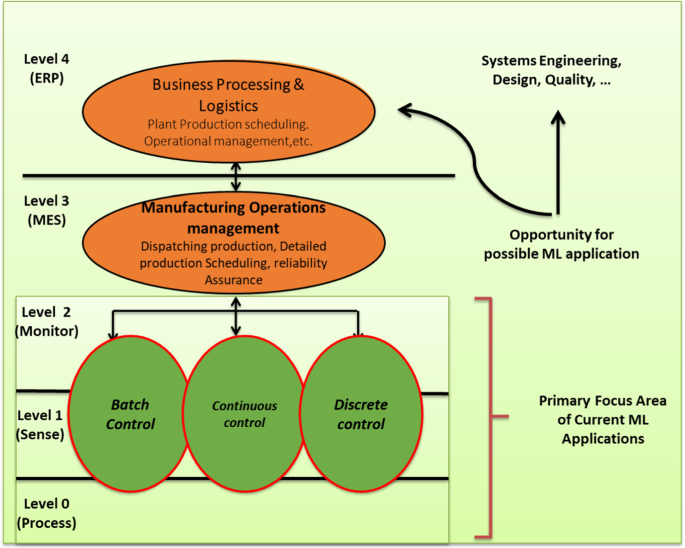

Though industrial applications top the chart, ML techniques are widely used in significant manufacturing processes like welding, grinding, and AM (Jia et al., 2021). ML in AM is an attractive research area, as it forms a stepping stone to the next industrial revolution. The range and architecture of the ISA-95 framework are highlighted in Fig. 4, which also outlines key application sectors where ML concepts might be adopted in production and other sectors. ML has seen two surges of application in manufacturing. The first occurred in the early 1980s, as computers and networking began to spread. Because of practical difficulties, ML could not deliver its full potential in problem-solving, and the industrial adoption of the technology was therefore poor. But after the arrival of coding platforms with improved user interfaces and more advanced computer processors, ML has once again started playing a significant role in almost all industries (Thoben et al., 2017). The focus of major industries and research groups is mainly on developing domain-specific ML models based on references from past works. Cross-domain models, by contrast, have attracted very little attention, although they would be a handy tool for connecting and interpreting data throughout the life cycle of any product.

ML platforms have remained fragmented across the product life cycle, including conceptualization, planning, fabrication, inspection, and maintenance. A huge volume of data is being generated every day with the increased application and adoption of modern manufacturing concepts such as the Industrial IoT, a subsystem of Industry 4.0, and intelligent manufacturing. The question that remains unanswered is how well such data can be connected to develop an ML system for manufacturing. In applications such as Total Conceptual models, Design for Lean Thinking, and Design for Manufacturing, understanding the numerous product life cycle stages is critical (Garbie, 2013; Silva et al., 2014). All types of cost growth are mostly the result of decision-making. It has been found that reducing the negative impact of decisions made earlier in the life cycle can benefit a manufacturing system’s cost and performance. For this kind of decision-making, production needs a thorough grasp of the entire life cycle and of how one action affects another.

Several ML models have been developed to tackle massive data (Multivariate Statistical Methods in Quality Management xxxx). However, factors such as possible over-fitting must be considered during implementation (Widodo & Yang, 2007). Several options are available for reducing dimensionality if it proves to be an issue, although this is unlikely given the influence of the algorithms used (Pham & Afify, 2005). ML in manufacturing can be vital for extracting patterns from available data that can be used to estimate the likely output (Nilsson & Nilsson, 1996). This can help process owners make better decisions or automatically improve the system for a better profit margin. The objective of specific ML algorithms is to find patterns or regularities that explain relationships between the various causative parameters involved in the process. Given the challenges of a quickly changing, complex manufacturing setting, ML, as part of AI, has the ability to learn and evolve. Hence, the developer is free to analyze a process without having to anticipate every consequence of the situation. As a result, ML makes a compelling case for its implementation in manufacturing compared to other prevailing models. A significant strength of ML models is that they automatically learn from and adapt to changing situations (Lu, 1990).

A major challenge of integrating ML with manufacturing processes is collecting appropriate data. This is a major limitation because the quality, quantity, and composition of data vary and depend on the end requirement. The type of data available to feed the algorithms is therefore crucial and strongly influences output performance. High-dimensional data often contain a large amount of irrelevant and redundant information, which can significantly affect the performance of the ML algorithm used (Yu & Liu, 2003). At present, most ML techniques process data containing continuous and nominal values. The degree of influence depends on multiple factors, including the algorithm and the types of variables involved, which makes this a major challenge for researchers applying ML in manufacturing. Security concerns and industrial competition frequently restrict the gathering of data of the required quality and hamper ML integration. In many instances ML permits better analysis of the information fed to it than conventional methods, with a considerably lower need for raw data; still, its successful integration with the system needs special attention. As noted by Hoffmann (1990), ML requires a lot of time for data collection, whereas conventional methods invest considerable effort in extracting useful information from raw data. After the data are collected, preprocessing is crucial because the output depends strongly on which preprocessing algorithm is chosen, and this can complicate the training of the algorithms used. In manufacturing processes it is common for some attributes or their values to be missing from the master data sheet. Such missing values are a major hurdle for the successful and reliable integration of ML in manufacturing.
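
One simple way to handle the missing attribute values mentioned above is column-wise mean imputation. The sketch below is only an illustration under stated assumptions: the record layout (laser power, scan speed) and the values are hypothetical, and mean imputation is one of many possible preprocessing choices, not a method prescribed by the works cited here.

```python
from statistics import mean


def impute_missing(rows):
    """Replace None entries with the mean of the observed values in that column.

    Assumes every column has at least one observed value; otherwise
    statistics.mean raises StatisticsError.
    """
    n_cols = len(rows[0])
    col_means = []
    for j in range(n_cols):
        observed = [row[j] for row in rows if row[j] is not None]
        col_means.append(mean(observed))
    return [
        [col_means[j] if value is None else value for j, value in enumerate(row)]
        for row in rows
    ]


# Hypothetical process log: [laser_power_W, scan_speed_mm_s]; None marks a gap.
records = [[200.0, 800.0], [None, 1000.0], [240.0, None]]
filled = impute_missing(records)
print(filled)
```

Because the choice of preprocessing affects the trained model, the imputation rule should be fixed on the training data and then reused unchanged on new data.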

Another important challenge in ML integration is choosing a suitable technique or algorithm for a particular problem. Although there are many ‘general’ ML techniques, specialized problems need dedicated methods, each with its own pros and cons (Hoffmann, 1990).

At present, a few common approaches are used for choosing an appropriate ML algorithm for common problems, as follows:

- First, determine the availability of data and its source (reliable data sources are preferred). Then check how the data is framed, i.e., whether it is labelled or unlabelled, to choose between a supervised, unsupervised, or reinforcement learning (RL) approach.

- Second, assess the suitability of the ML algorithm for the problem type and its definition. Special attention should be paid to the data structure and the quantity of data required for training and assessment.

- Third, review the track record of ML algorithms applied under similar conditions to check their response time and output. The research problems in which they were applied should have a similar background and applicability.
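
The first step in the list above can be written as a small decision rule. This is a deliberately simplified sketch: the function name and its two flags are hypothetical, and real projects weigh many more factors (the second and third steps are not modelled here).

```python
def suggest_paradigm(has_labels: bool, learns_from_reward: bool = False) -> str:
    """Map how the data is framed to a learning paradigm (illustrative rule only)."""
    if learns_from_reward:
        # An agent improves through feedback from its environment.
        return "reinforcement"
    return "supervised" if has_labels else "unsupervised"


print(suggest_paradigm(has_labels=True))
print(suggest_paradigm(has_labels=False))
print(suggest_paradigm(has_labels=False, learns_from_reward=True))
```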

The next critical challenge for successful ML integration is “data interpretation”. Multiple factors should be taken into account for reliable data analysis, for example, the format of the output, the type of algorithm, the variable settings, the intended outcome, the data, and its preprocessing. When the outcomes are analyzed, some of these factors may have more influence than others.

Common ML algorithms applicable to manufacturing applications

The prevalence of machine learning has been increasing tremendously in recent years due to the high demand and advancements in technology. The potential of machine learning to create value out of data has made it appealing for businesses in many different industries. Most machine learning products are designed and implemented with off-the-shelf machine learning algorithms with some tuning and minor changes.

There is a wide variety of machine learning algorithms that can be grouped in three main categories:

Supervised machine learning

Supervised learning (SL) permits a software application to learn from a training data set in order to correctly label unlabelled data in the test set (Learned-Miller, 2014). The ML model adjusts the weights of the input variables until a proper fit to the data is attained. SL algorithms can solve a wide range of numerical problems in engineering. In SL, every input is labelled with an output Y, and the training dataset contains numerous input–output pairs. Every input, such as X1, X2, …, Xn, is a vector that contains all of the attributes that may affect performance. An output can be a categorical target (e.g., good or unsatisfactory), in which case the ML task is classification, or a numerical target (e.g., porosity or strength), in which case the ML task is regression. The datasets can take many forms, including photos, audio samples, and text. An objective function, called the cost function, is used to calculate the error between the predicted and actual output values. The testing phase can yield an unbiased assessment of the model’s accuracy on previously unseen data, known as a test set.
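
The ingredients described above (labelled input–output pairs, weights adjusted to fit the data, a cost function, and a held-out test set) can be seen in a minimal regression sketch. The data below are synthetic and made up for illustration (generated from y = 2x + 1), and the learning rate and epoch count are arbitrary assumptions rather than recommended settings.

```python
def predict(w, b, x):
    """Linear model with one weight and one bias."""
    return w * x + b


def mse(w, b, data):
    """Cost function: mean squared error between predicted and actual outputs."""
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)


def fit(data, lr=0.05, epochs=2000):
    """Adjust the weight and bias by gradient descent on the MSE cost."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in data) / n
        grad_b = sum(2 * (predict(w, b, x) - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Synthetic (made-up) labelled pairs generated from y = 2x + 1.
train = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
test = [(4.0, 9.0), (5.0, 11.0)]  # unseen during training

w, b = fit(train)
train_error = mse(w, b, train)
test_error = mse(w, b, test)
print(w, b, train_error, test_error)
```

The test error is computed only on pairs the model never saw during fitting, which is what makes it an unbiased check of the fit.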

Researchers have developed many SL algorithms, each with its own applications, advantages, and disadvantages. However, choosing the best of them for a given application is a daunting task.

Statistical Learning Theory (SLT)

SLT is a widely adopted and well-suited foundation for ML algorithms that solve manufacturing problems. According to SLT, training teaches an algorithm (without it being explicitly programmed) to select a function that relates inputs to outputs. The primary emphasis of SLT lies on how well the chosen function predicts the output for previously unseen inputs (Evgeniou et al., 2000). Practical algorithms such as NNs, SVMs, and Bayesian modeling are derived from the foundations of SLT (Evgeniou et al., 2002). A major advantage of SLT is that, with minor changes, it fits multiple manufacturing applications well. SLT also makes it possible to work with fewer specimens or samples. A major limitation of SLT is overfitting in some practical problems (Evgeniou et al., 2002). Additionally, with SLT the computational complexity is not entirely avoided but is reduced by relaxing design queries (Koltchinskii et al., 2001).

Bayesian networks (BNs)

A BN is a graphical model that describes the probabilistic relationships among multiple parameters. BNs can be regarded as among the best-known applications of SLT. The naïve Bayesian network is a simplified form of BN built on a directed acyclic graph. BNs offer a few advantages, such as limited storage requirements, the ability to be used as an incremental learner, and ease of interpreting the output. A limitation of BNs is that their tolerance for redundant and interdependent attributes is minimal (Joshi et al., 2019).
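
The storage and incremental-learning advantages noted above follow from the fact that a naïve Bayes classifier only needs to keep counts. The sketch below is a minimal categorical version with add-one smoothing; the class name, feature names, and the five training examples are hypothetical and invented purely for illustration.

```python
import math
from collections import defaultdict


class NaiveBayes:
    """Categorical naive Bayes with add-one smoothing, updated one example at a time."""

    def __init__(self):
        self.class_counts = defaultdict(int)
        self.feature_counts = defaultdict(int)  # key: (label, feature_index, value)
        self.values = defaultdict(set)          # feature_index -> values seen so far

    def update(self, features, label):
        """Incremental learning step: only counters are stored, never raw data."""
        self.class_counts[label] += 1
        for i, value in enumerate(features):
            self.feature_counts[(label, i, value)] += 1
            self.values[i].add(value)

    def predict(self, features):
        total = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.class_counts.items():
            score = math.log(count / total)  # log prior
            for i, value in enumerate(features):
                num = self.feature_counts.get((label, i, value), 0) + 1
                den = count + len(self.values[i])
                score += math.log(num / den)  # smoothed log likelihood
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical print outcomes: (nozzle temperature level, print speed level) -> result.
model = NaiveBayes()
examples = [
    (("high", "slow"), "good"),
    (("high", "fast"), "good"),
    (("low", "fast"), "defect"),
    (("low", "slow"), "defect"),
    (("high", "slow"), "good"),
]
for features, label in examples:
    model.update(features, label)

print(model.predict(("high", "fast")))
print(model.predict(("low", "slow")))
```

The independence assumption between attributes is also why strongly interdependent attributes degrade this kind of model.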

Instance-based learning (IBL)

IBL, also referred to as memory-based reasoning (MBR) (Kang & Cho, 2008), is typically based on k-nearest neighbor (k-NN) classifiers. Although IBL/MBR techniques can achieve a satisfactory level of accuracy with good stability, they have not proven to be the best match for ML integration (Dutt & Gonzalez, 2012). One primary reason for their limited adoption may be the difficulty of setting the attribute weight vector in little-known domains.
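
A minimal k-NN sketch makes the role of the attribute weight vector concrete. The training points, labels, and weights below are hypothetical; the example only shows that changing the weights can change the predicted class, which is why choosing them is hard in little-known domains.

```python
import math
from collections import Counter


def weighted_distance(a, b, weights):
    """Euclidean distance with one weight per attribute."""
    return math.sqrt(sum(w * (x - y) ** 2 for x, y, w in zip(a, b, weights)))


def knn_predict(train, query, k=3, weights=None):
    """Majority vote among the k stored instances closest to the query."""
    if weights is None:
        weights = [1.0] * len(query)
    ranked = sorted(train, key=lambda item: weighted_distance(item[0], query, weights))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical two-attribute measurements with quality labels.
train = [
    ((1.0, 1.0), "ok"), ((1.2, 0.9), "ok"), ((0.8, 1.1), "ok"),
    ((3.0, 3.2), "defect"), ((3.1, 2.9), "defect"), ((2.9, 3.0), "defect"),
]

print(knn_predict(train, (1.1, 1.0)))
print(knn_predict(train, (3.0, 1.0)))                      # equal weights
print(knn_predict(train, (3.0, 1.0), weights=[1.0, 0.0]))  # second attribute ignored
```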

NN or artificial neural networks

These techniques are inspired by the functionality of the brain. Because the brain can intelligently complete many tasks (e.g., vision, speech recognition, and analysis), the same capabilities can benefit engineering problems when successfully transferred to ML systems (Nilsson & Nilsson, 1996). An NN permits an artificial system to accomplish unsupervised, reinforcement, and supervised learning tasks (e.g., pattern recognition) by simulating the decentralized ‘computation’ of the central nervous system through parallel processing. NNs are applied in multiple manufacturing sectors, such as the semiconductor industry, and to diverse problems such as process control. Manallack and Livingstone (1999) suggested that NN algorithms can yield the best results in most cases but tend to overfit the data, which is a major limitation of NNs in real-life applications. Additionally, NNs make the model more complicated, tolerate missing values poorly, and require heavy computation time.

SVMs

SVMs were introduced as a novel ML technique aimed at two-group classification problems. Burbidge et al. (2001) claimed that the SVM is a robust and highly accurate ML technique that is also well suited to structure–activity relationship analysis. It may be regarded as an applied technique of SLT (Wuest et al., 2014). An SVM represents the decision boundary using a subset of the training examples, known as the support vectors.

Ensemble methods

An ensemble method combines a weighted committee of learners to solve a classification or regression problem. The committee, or ensemble, contains several base learners such as NNs, trees, or nearest neighbors. When the base learners all come from the same algorithm family, the ensemble is referred to as homogeneous. A heterogeneous ensemble, in contrast, is constructed by combining base learners of different types.
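
The weighted committee can be sketched as a weighted vote. In the example below the three base learners are simple hand-written threshold rules standing in for trained models; the rule thresholds, attribute names, and weights are all hypothetical.

```python
from collections import defaultdict


def weighted_vote(learners, weights, x):
    """Return the label whose supporting learners carry the largest total weight."""
    scores = defaultdict(float)
    for learner, weight in zip(learners, weights):
        scores[learner(x)] += weight
    return max(scores, key=scores.get)


# Hypothetical base learners (placeholders for trained NNs, trees, or k-NN models).
def rule_a(x):
    return "defect" if x["porosity"] > 0.05 else "ok"


def rule_b(x):
    return "defect" if x["roughness"] > 12.0 else "ok"


def rule_c(x):
    return "defect" if x["porosity"] > 0.02 and x["roughness"] > 8.0 else "ok"


committee = [rule_a, rule_b, rule_c]
sample = {"porosity": 0.03, "roughness": 13.0}

print(weighted_vote(committee, [0.4, 0.3, 0.3], sample))
print(weighted_vote(committee, [0.7, 0.2, 0.1], sample))
```

With the first weight vector the two learners voting "defect" outweigh the third; with the second, the single heavily weighted learner decides the outcome.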

Deep machine learning

This is a newer field of ML that processes data through several processing layers to obtain highly non-linear and complex feature representations. It is primarily driven by the computer vision and language processing domains but has significant potential for data-driven manufacturing applications. Deep convolutional neural networks (ConvNets) have demonstrated remarkable prediction ability across a wide spectrum of computer vision tasks. In contrast to standard NNs, where each neuron in layer n is connected to all neurons in layer (n − 1), a ConvNet is built from numerous filter stages with a restricted view, which makes it well suited to image, video, and volumetric data. From layer to layer, a ConvNet transforms the output of the previous layer into a higher-level abstraction by applying non-linear activations.
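
The "restricted view" of a filter stage can be illustrated with a single 2D filter followed by a non-linear activation. This is a minimal sketch, not a full ConvNet: the 4 by 4 image and the 2 by 2 edge filter are made-up values, and, as in most deep learning libraries, the operation is implemented as a sliding dot product without flipping the kernel.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image; each output sees only a small local patch."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Non-linear activation applied element-wise."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Made-up 4x4 image with a dark-to-bright vertical edge in the middle.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [[-1, 1], [-1, 1]]  # responds to a dark-to-bright vertical edge

features = relu(conv2d_valid(image, kernel))
print(features)
```

The same four kernel weights are reused at every position, so the filter needs far fewer parameters than a fully connected layer over the same image.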

ML in additive manufacturing

Production industries are creating large volumes of data on the manufacturing line in this epoch of the fourth industrial revolution, often called “Industry 4.0,” and the AM/3D printing industry is no exception. AM technologies are crucial elements of the Industry 4.0 concept (Alabi, 2018; Xing et al., 2020). AM denotes a group of manufacturing technologies in which materials are joined directly to produce components from 3D model data, typically by layering or solidifying a material (Guo & Leu, 2013; Kulkarni et al., 2000). AM has been successful in producing 3D structures from various materials, as listed in Table 1. Any improvement to present AM processes in design, production, and process requires significant knowledge on the part of operators and designers (Wang et al., 2020). Design, process, and manufacturing become more complicated when the benefits of AM are to be reaped (Jia et al., 2021; Wang et al., 2020). Any major alteration in the design demands in-depth knowledge of the variables and their corresponding effects on part behaviour. As a result, these objectives frequently entail significant time and/or computation trade-offs.

Table 1 Commonly used metals/alloys for commercial use of AM (Frazier, 2014)

AM parameters are only weakly orthogonal: boosting the extrusion temperature, for example, could enhance layer adhesion but also increase stringing. Fine-tuning workflow variables for particular parts or new materials can be time-consuming and uneconomical (Qi et al., 2019). Part consistency is crucial in industries where AM integration is favoured, such as aerospace; however, variation in the quality of parts produced on a given machine is a hurdle to wider acceptance. These difficulties all involve the administration and evaluation of vast volumes of data and knowledge. Such issues can be alleviated by using ML algorithms to reduce the amount of human or computational work needed to achieve satisfactory outcomes. Baumann et al. (2017) provided the basis for this introduction by noting that AM is a rapid prototyping (RP) technology with far more potential than mere prototyping. As a result, there is no need to construct any production tools ahead of time. Furthermore, material waste is decreased because the process is additive rather than subtractive. On the downside, the cost per part is significantly higher than for mass-produced items; hence AM is best suited to limited batches (Morrison, 2015). Depending on the process used, the quality of additively manufactured objects can vary and be inferior to that of bulk or custom-machined parts. Fabrication is usually done in discrete planar layers, although non-planar techniques also exist (Ahlers et al., 2019). AM offers a range of benefits compared to conventional methods, such as mass customization of parts, increased complexity at the macro, meso, and micro scales, and components with complicated structures and designs. As a result, AM has attracted a high degree of research in industrial and academic environments worldwide in recent years.

Despite these advantages, there are some disadvantages, such as a fundamental lack of consistency (Dowling et al., 2020), which has made certification problematic in several industries (Thompson et al., 2016). Another drawback is the scarcity of adequate knowledge of design guidelines (Thompson et al., 2016). In addition, the materials used in AM are known to produce side effects such as skin damage and breathing problems. Table 2 lists the various chemicals used in AM processes, together with their hazardous and ecological consequences (Huang et al., 2012).

Table 2 Hazardous and ecological impact of different chemicals used in AM processes (Huang et al., 2012)


In this article, the terms 3D printing and AM are used interchangeably to describe technologies capable of making three-dimensional physical items from three-dimensional digital models by layer-by-layer addition, curing, or other processing of material. These technologies differ from traditional methods that remove material from a workpiece to produce the desired shape, e.g., drilling, turning, or other subtractive fabrication methods (Beaman et al., 2020). Although AM is suitable for all major industries, the aerospace, automotive, and medical sectors have been at the forefront of its development. The main driver in the aerospace and automotive industries is minimizing component weight without compromising performance. Medical applications of AM have a broader range of motives, with patient customization, enhanced biocompatibility, and performance as common themes. Consumer products also commonly employ AM, with mass customization and light-weighting being two prominent motives (Gibson et al., 2021). Beyond the established sequence of producing predictions through data modelling, experts are investigating novel ways to combine ML algorithms with AM. AM professionals use ML techniques, applications, and frameworks to improve quality, optimise production processes, and reduce costs. Broadly, ML may be characterised as supervised, unsupervised, or reinforcement learning (Sutton & Barto, 2015).

AM has existed since around 1980, but it gained popularity and funding only recently, after key patents expired, notably for consumer-grade 3D printing technology. ML has been studied since around 1960 (Widrow & Lehr, 1990) and builds on biologically inspired notions such as the perceptron (Rosenblatt, 1958). It has lately gained traction due to outstanding results produced by research organizations and commercial enterprises such as Google. Studies are now being directed specifically at AM research, addressing fundamental and complex challenges through ML approaches. The present is often described as an era of data creation, since a large quantity of information is generated every day across numerous domains; production, online networks, pharmaceuticals, aviation, 3D printing, automobiles, and telecommunications are just a few examples.

ML is a discipline of AI that enables a device to learn from a dataset obtained from various sources and to perform intelligent activities, such as carrying out complex processes using the available data, rather than following a pre-programmed procedure (Craig et al., xxxx). ML mainly deals with massive quantities of data. The sector has inevitably turned to machine learning approaches because of the vast amount of data collected throughout the AM build process. As the sector grows, building industrial AM machines or entry-level desktop 3D printers that can produce large quantities of defect-free components or finished products meeting strict failure criteria is a challenge in its own right. Parameter variations during the build were recently identified as causes of defects in AM. Knowing this could help the AM industry identify potential trouble spots in the finished product or the equipment, and perhaps develop more robust build procedures and cost-cutting strategies. Computer vision, prediction, and information retrieval are three major ML components with direct applications in AM processes. Advances in graphics hardware have enabled in-depth research and quick optimization of ML algorithms on massive data (Shinde & Shah, 2018). As a result of these advancements, ML solutions can now be used in AM for better productivity. The focus of this section is therefore on AM technology and how it might be used in conjunction with ML.

Figure 5a depicts the ML taxonomy and the corresponding implementations in AM. A well-known ML approach is the artificial neural network (ANN). Its prominence has grown in tandem with the advancement of processing power, notably with the adoption of GPUs and their parallel computational power. An ANN comprises different layers, each containing a set of basic neurons (Fig. 5b). The perceptron, designed by Rosenblatt in 1958, was one of the first studies on ANNs (Rosenblatt, 1958). In that context, the neuron is defined by its weight(s), and the Heaviside step function serves as the activation function that computes the response from the weighted input values. A layer’s output is the collection of the outputs of its neurons. During the training phase of a multilayer ANN, backpropagation may be employed to update each neuron’s weights. This is done by computing the gradient of the loss function, which represents a distance between the ANN’s output and the training data, and then propagating the errors backward. The resulting network is a (classical) feedforward neural network (FNN) if the layers are ordered sequentially, i.e., if no backward connection exists between separate layers; otherwise it is a recurrent neural network (RNN). When training RNNs, long short-term memory networks (LSTMs) address the vanishing-gradient issue in the backpropagation process. Deep learning, in turn, relies on an ANN with multiple layers, including hidden layers (i.e., layers that are neither input nor output) and also convolutional and pooling layers that are only locally connected (i.e., not all neurons in one layer are linked to neurons in the next layer). Convolutional neural networks (CNNs) are constructed by stacking such layers and connecting them to their neighboring layers.
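
A single perceptron with a Heaviside step activation can be sketched in a few lines. Because the step function has no useful gradient, this sketch uses the classic perceptron learning rule rather than backpropagation; the logical AND task, the learning rate, and the epoch count are assumptions chosen only to keep the example small.

```python
def heaviside(z):
    """Step activation: fires (1) when the weighted input is non-negative."""
    return 1 if z >= 0 else 0


def neuron(weights, bias, x):
    """Response of one neuron: step function of the weighted sum of inputs."""
    return heaviside(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_perceptron(samples, lr=1.0, epochs=20):
    """Perceptron learning rule: nudge weights by the signed prediction error."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - neuron(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Logical AND as a tiny, linearly separable toy problem.
and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_gate)
outputs = [neuron(weights, bias, x) for x, _ in and_gate]
print(weights, bias, outputs)
```

Multilayer networks replace the step function with differentiable activations so that the gradient of the loss can be propagated backward through the layers.
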
In the current AM industry, a key hurdle is the variability in the functionality of manufactured components, which depends significantly on numerous processing variables such as printing speed and layer thickness. Various review articles have looked into the relationship between process, structure, and property (Kumar & Kar, 2021; Kumar & Kishor, 2021; Singh et al., 2017). Running experiments or high-fidelity simulations is one option for dealing with this problem, acquiring reliable data, and helping to adjust processing parameters. Still, both are time-intensive, costly, or both. In situ monitoring is another way to ensure part quality and process dependability, but it requires an effective defect detection approach based on in situ data such as images. Both routes need a robust and accurate data processing and data extraction tool. ML, a branch of AI, is addressing these challenges. With a trustworthy dataset, ML algorithms can learn from the training set and draw conclusions from that information. On the one hand, trained ML algorithms can make predictions and identify the ideal operating settings. On the other hand, ML algorithms can use in situ data to detect defects in real time. More ML applications, such as geometric deviation control, cost estimation, and quality analysis, have been documented in recent studies (Jin et al., 2020; Razvi et al., 2019). In general, ML applications can be considered a type of data processing. ML integration can therefore be regarded as an important part of Industry 4.0.

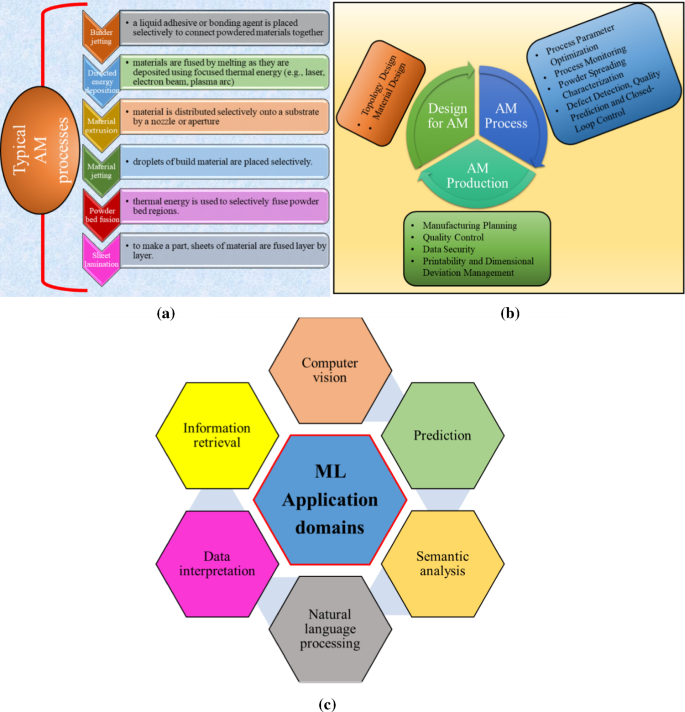

As per ASTM F42 (ISO & ASTM5, 2900 – xxxx), AM methods can be classified into major groups, as shown in Fig. 6a. Material jetting (Wang et al., 2018a) and stereolithography (Lee et al., 2013) are also classes of AM processes; however, they are not discussed here in detail. To better convey the advantages of ML in manufacturing processes, three broad categories are proposed and shown in Fig. 6b. The aim is to demonstrate how these groups affect successful AM integration and information protection, planning, and scheduling. They are dealt with in detail in the following sections. As compiled by Shinde and Shah (2018), the major application domains of ML can be grouped into computer vision, prediction, semantic analysis, natural language processing, and information retrieval (Fig. 6c).

Machine learning in design for additive manufacturing (DfAM)

DfAM is a subset of Design for Manufacturing and Assembly (DfMA), although it differs from conventional DfMA in several ways. Because AM can produce complicated structures that are impossible to fabricate using traditional manufacturing processes, designers are rethinking the conventional DfMA process for use in AM (Thompson et al., 2016). AM can also avoid the assembly phase because it may fabricate the entire product in a single step. DfAM (Ponche et al., 2014; Thompson et al., 2016) is a relatively new term that reflects the unique possibilities of AM as well as the differences between traditional manufacturing and AM approaches. DfAM falls under the following categories:

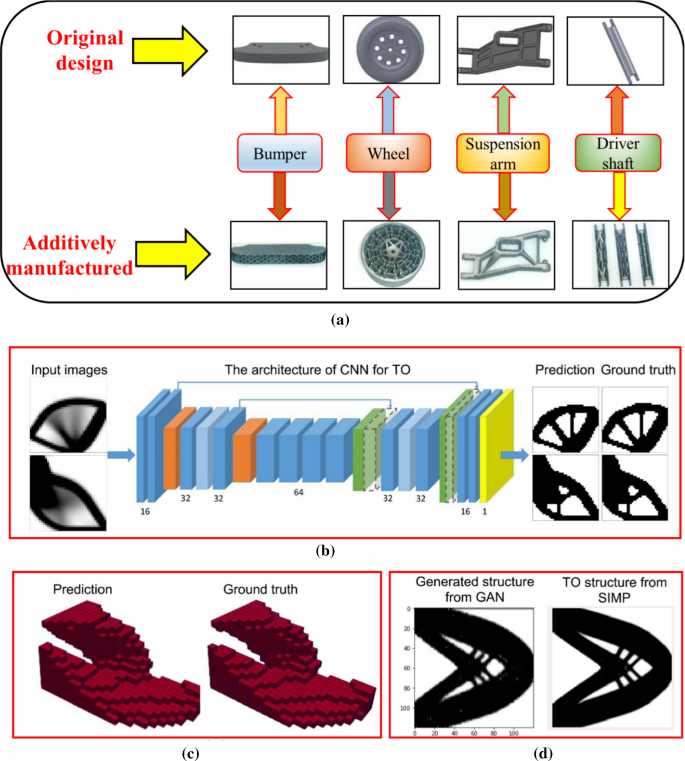

Topology design

Topology optimization (TO) (Bendsøe, 1999) is a method for designing structures that optimizes the material distribution inside a design region while taking into account specified loads and constraints. TO procedures typically involve multiple design and prototyping iterations, which makes them computationally intensive, especially for large-scale and difficult-to-make components. Once ML models have been properly trained, they can propose favorable designs without starting over, allowing the ML-centric technique to complement the classic TO approach. Unfortunately, there is still a paucity of research on employing ML for topology design in AM applications. Yao et al. (2017) presented an integrated ML methodology, based on a clustering algorithm, for suggesting AM design features during the design phase. Figure 7a shows a typical case study in this context. That work, however, used no TO techniques and instead replaced heavy parts of the initial model with lightweight components retrieved from a set. In other work, a CNN was trained on the intermediate topologies obtained with typical TO techniques in order to solve a mechanical problem. The TO algorithm was interrupted after only a few iterations, and the CNN predicted the optimal design from this interim state. With some pixel-wise adjustments, the trained CNN could predict the final optimized topology nearly twenty times faster than the standard simplified isotropic material with penalization (SIMP) method. The trained network could also handle heat-flux problems and, when thresholding was applied, outperformed SIMP in terms of performance as well as numerical precision. This indicates the broad generalizability of the CNN model, which requires no knowledge of the nature of the problem. Banga et al. (2018) extended this approach to 3D structures.

Compared with using the FEM-based SIMP technique exclusively, their trained model could anticipate the final designs quickly, with an average numerical precision of 96.2% and a time saving of 40%. A generative adversarial network (GAN) can predict optimized structures without SIMP iterations. The GAN is a generative modeling approach that builds on deep learning techniques (Goodfellow et al., 2014). A well-trained GAN model can create a large number of previously unseen designs with intricate geometry that fit the design specifications, based on the given constraints and variables. Figure 7b-d shows several examples of these studies. It should be stressed that all of the training data were generated via the usual TO approach. As a result, while ML cannot completely replace the classic TO technique, it can be used to reduce the number of iterations and accelerate the optimization. Furthermore, the ML-centered TO technique might be used for a quick, approximate initial estimate. On the other hand, the above-mentioned studies have yet to be carried out in additive manufacturing.
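
For readers unfamiliar with SIMP, its core is a power-law interpolation that penalizes intermediate densities, followed (optionally) by thresholding to a solid/void design. The sketch below shows only that interpolation and the thresholding step; the default values (unit solid modulus, a small minimum modulus, a penalty exponent of 3, and a cutoff of 0.5) are common illustrative choices and are assumptions here, not settings taken from the cited studies.

```python
def simp_modulus(density, e_solid=1.0, e_min=1e-9, penalty=3.0):
    """Penalized Young's modulus of an element with relative density in [0, 1].

    Intermediate densities get disproportionately low stiffness, which pushes
    the optimizer toward designs that are either solid or void.
    """
    return e_min + (density ** penalty) * (e_solid - e_min)


def threshold(densities, cutoff=0.5):
    """Convert a grey-scale density field into a binary solid/void design."""
    return [1 if d >= cutoff else 0 for d in densities]


for rho in (0.0, 0.5, 1.0):
    print(rho, simp_modulus(rho))

print(threshold([0.1, 0.5, 0.9]))
```

A half-dense element therefore contributes only about one eighth of the solid stiffness under these assumed settings, which is why grey regions tend to disappear as the iterations proceed.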

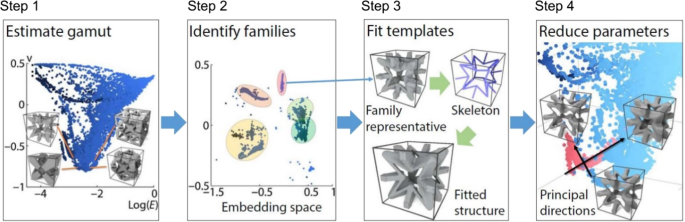

Material design

Materials experts and researchers have created a variety of metamaterials, which are composites with distinctive properties. Manually developing metamaterials using the Edisonian (trial-and-error) method is extremely difficult and laborious. The design of metamaterials can be considerably accelerated using modern ML approaches (Gu et al., 2018). Thanks to recent developments in ML, material specialists and researchers can now predict material properties in order to invent new metamaterials. Furthermore, as several researchers have shown, AM techniques can realize designs that were previously impossible to construct. The synergistic potential of cutting-edge ML in materials and AM techniques is largely untapped. Chen et al. (2018) devised a fully automated method for identifying ideal metamaterial designs, which were then experimentally confirmed using the PEBA2301 elastic material and a selective laser sintering (SLS) process (Fig. 8).

Given the target elastic material parameters, such as Young’s modulus, Poisson’s ratio, and shear modulus, the system is expected to produce a customized microstructure that meets the specification using ML. Gu et al. (2018) constructed 100,000 microstructures using three kinds of unit cells on an 8 by 8 lattice, accounting for fewer than 10⁻⁸% of all possible combinations. A convolutional neural network (CNN) was then trained on a database that included mechanical properties calculated using FEM, yielding new microstructural patterns for a composite metamaterial twice as strong and forty times as hard. Their designs were validated with the multi-material jetting AM method (Fig. 9). One notable difference is that the FEM simulation required around five days to calculate the mechanical properties, whereas the CNN took only ten hours to train and a few seconds to generate its output.
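
The size of the design space quoted above can be checked with a short calculation. The sketch assumes that each of the 64 lattice sites independently takes one of the three unit-cell types and that symmetric duplicates are not removed; under that assumption the sampled fraction is far below the stated bound.

```python
cells = 8 * 8                 # lattice sites
unit_cell_types = 3           # choices per site
sampled = 100_000             # microstructures that were generated

# Assumption: every site is chosen independently, no symmetry reduction.
total_designs = unit_cell_types ** cells
fraction_percent = 100 * sampled / total_designs

print(f"possible designs: {total_designs:.3e}")
print(f"sampled share:    {fraction_percent:.3e} %")
```

Under this counting the sampled share is on the order of 10⁻²⁴ %, which illustrates why exhaustive simulation of such a design space is not feasible and a learned model is attractive.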

Machine learning for additive manufacturing processes

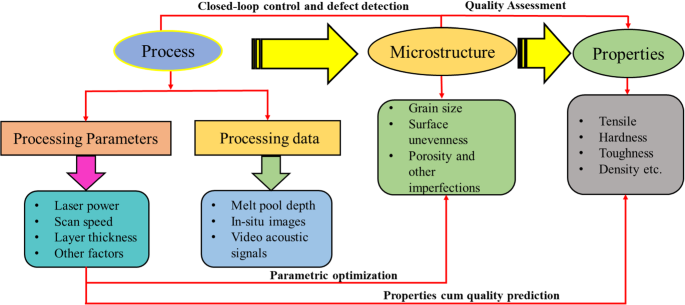

ML is a tool for data processing. The data that can be evaluated and used in the process–structure–property (PSP) relation chain are presented in Fig. 10. In common PSP relationships, the term “process” is split into two parts, “processing parameters” and “resultant processing data”, which distinguishes data available before processing from data available after it.

- Processing parameters such as extruder temperature in ME, laser power in L-PBF, printing speed, and layer thickness all have a significant effect on printed items and therefore influence their reliability and productivity;

- The intended structure has a major impact on production cost and on geometric deviation in produced items;

- In situ images and acoustic emissions (AE) can be used by monitoring systems to detect and classify problems in real time.

As a consequence, ML models trained on a dataset comprising at least two categories of linked data in the PSP chain can draw conclusions from this input. This is the most common approach to implementing ML models.

Process parameter optimization

Developers cannot know the quality of a part built with a particular set of processing parameters until it is manufactured. Consequently, several steps must be taken to ensure product performance, such as printing specimens and validating their performance, which makes the design process costly, time-intensive, and unpredictable. A direct link between the governing variables and product performance is therefore highly advantageous. Experiments and simulations are valuable tools for establishing such a link; however, finding optimal values is difficult when multiple input parameters interact. ML techniques can be used as surrogate models to improve efficiency (Wang et al., 2018b, 2019). For additively building new materials, process parameter development and optimization have traditionally been carried out using design of experiments or simulation methods. However, in the case of metal AM, an experimental strategy often necessitates a lengthy and costly investigation (Wang et al., 2018b, 2019).
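
The surrogate-model idea can be sketched as follows: fit a cheap model to a handful of (parameters, quality) observations, then search the cheap model instead of running new builds. Everything in this example is an assumption made for illustration: the `true_depth` function is a made-up stand-in for an experiment or simulation (not a physical model), the parameter ranges are invented, and a Gaussian-kernel weighted average is used as a simple substitute for the surrogate models used in the literature.

```python
import math


def true_depth(power, speed):
    """Made-up stand-in for an expensive experiment or simulation (not physical)."""
    return 300.0 * power / speed


# A small synthetic "design of experiments" grid: laser power (W), scan speed (mm/s).
powers = [150.0, 200.0, 250.0, 300.0]
speeds = [500.0, 750.0, 1000.0, 1250.0]
training = [((p, v), true_depth(p, v)) for p in powers for v in speeds]


def normalise(point):
    """Scale both parameters to [0, 1] so that distances are comparable."""
    p, v = point
    return ((p - 150.0) / 150.0, (v - 500.0) / 750.0)


def surrogate(point, bandwidth=0.1):
    """Gaussian-kernel weighted average of the training outputs."""
    q = normalise(point)
    num = den = 0.0
    for x, y in training:
        d2 = sum((a - b) ** 2 for a, b in zip(normalise(x), q))
        w = math.exp(-d2 / (2.0 * bandwidth ** 2))
        num += w * y
        den += w
    return num / den


def best_setting(target, candidates):
    """Pick the candidate whose predicted response is closest to the target."""
    return min(candidates, key=lambda c: abs(surrogate(c) - target))


candidates = [(float(p), float(v))
              for p in range(150, 301, 25)
              for v in range(500, 1251, 125)]
target_depth = 60.0
choice = best_setting(target_depth, candidates)
print(choice, surrogate(choice))
```

Each surrogate evaluation costs microseconds, so a dense candidate grid can be screened before any specimen is printed; the selected setting would still need experimental confirmation.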

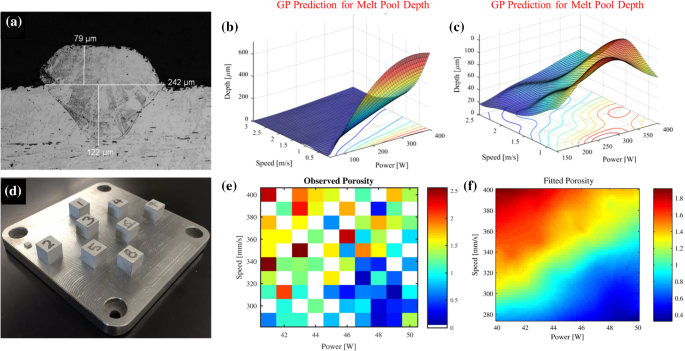

A physical-based simulation can be used to demonstrate the theoretical underpinnings for the production of various features during handlings. However, macro-scale models, such as FEM, may have inaccuracies with experimental outcomes owing to decreased assumptions. Single tracks or a few tracks and layers are frequently the subjects of increasingly more advanced approaches, such as computational fluid dynamics. This makes predicting the mechanical characteristics of pieces on a macro scale or in a continuum complex. As a result, several researchers have looked at the potential of using ML to overcome the issues mentioned above in metal AM process optimization, as shown in Table 3. ML was found to be primarily used as a link to two levels of quality criteria for significant process parameters: mesoscale and macroscale. Moreover, some scholars have employed process maps as a means of finding process frames. These process maps can be a valuable tool for further analysis. Single routes are the main construction elements in the mesoscale of high-energy AM. Topology of the weld pool can have a substantial influence on the finished quality of product, for example, shape, continuation and consistency. Because of insufficient empirical observations, the powder-based and wire-based DED (wire-based) processes were predicted using a multi-layer perceptron (MLP). The process variables were thus inexorably linked to the melt pool morphologies. It thus indicates a particular geometry can be attained by adjusting parametric combinations in the opposite direction. In the 3D response maps to melt pool depths vs. process settings, Tapia et al. (2017) used the Gaussian Process-base (GP) substitute model as shown in Fig. 11a-c. As a result, parametric combinations can be defined to eliminate formation of keyhole melting. A mixture of one empirical dataset and two additional literature data sets have been used for the 139 data sets. 
Several ad hoc filters were applied to remove anomalies, resulting in 96 valid data points. Their prediction error of 6.023 μm is acceptable since it was comparable to the measurement error. The porosity of AM-built parts is another primary concern at the mesoscale. Full density is the prime objective in metal AM since the mechanical behavior of components, especially fatigue behavior, is heavily affected by porosity. A multi-layer perceptron (MLP) can represent complex non-linear relationships but offers little insight into how its predictions are produced. A GP, by contrast, can usually quantify the uncertainty of its predictions, but for the same amount of input data it is substantially more costly than the MLP. As a result, MLP and GP combined with Bayesian approaches were used to estimate porosity in selective laser melting (SLM) based on combinations of process factors, as shown in Fig. 11(d)–(e). The open porosity of PLA samples during SLS processing was estimated using SVM and MLP algorithms.

Table 3 ML approaches for optimizing parameters in the AM process (Wang et al., 2020)


Multi-gene genetic programming (MGGP) is a cutting-edge technique that can automatically evolve the model structure, although it is limited by the model's generalization ability. An ensemble-based MGGP with better generalization capacity was therefore applied to SLS printing of a 58 wt% HA + 42 wt% PA powder mix, so that the required open porosity could be achieved by adjusting the process parameters.

ML techniques can also be used to investigate the macro-scale characteristics of AM-built objects. An adaptive neuro-fuzzy inference system (ANFIS) can usually handle imprecise values. Since there are so many uncertainties in the fatigue process, it is well suited to analyzing fatigue properties. Zhang et al. (2019) acquired 139 fatigue data points for SS316L specimens manufactured under 18 different processing conditions on the same SLM machine. The ANFIS was used effectively with the ‘process based’ model and the ‘property model’ to predict high-cycle fatigue life with a root mean square error of 11–16%. However, when they used the set of 66 data points to estimate fatigue life, the performance of their models dropped, owing to machine-to-machine variability. It is therefore advisable to use both experimental and literature data in model training in order to improve generalization. Wang et al. (2018b) argued that examining the top surface morphology might help narrow down the electron beam melting (EBM) process window. SVM, for example, works well when the margin between classes is clear, but it is prone to overfitting. Aoyagi et al. (2019) proposed a straightforward method for constructing EBM process maps using only 11 datasets. It should be noted that SVM was used in this study solely to fit the data and identify the decision boundary. Because the dataset was so small, setting aside part of it to evaluate the model's accuracy was problematic. Recurrent neural networks (RNN) are used to predict time series. To predict the high-dimensional thermal history of complicated components in the DED process, Mozaffar et al. (2018) therefore trained an RNN on FEM data, given the time dependence of the inputs. In addition, both MLP and SVM have been applied to forecast thin-wall deposits in DED.

In material extrusion AM, studies have focused on mechanical characteristics at the macro scale. FDM has been studied extensively, with process parameters including layer thickness, temperature, and build orientation. Here, the MLP is the most widely employed method. An adequately trained MLP is preferred for its precision and its ability to predict nonlinear data. Compressive strength, wear rate, dynamic modulus of elasticity, and creep and recovery properties have been thoroughly assessed, and the tensile properties of PLA and PC-ABS materials have been estimated.

Process monitoring

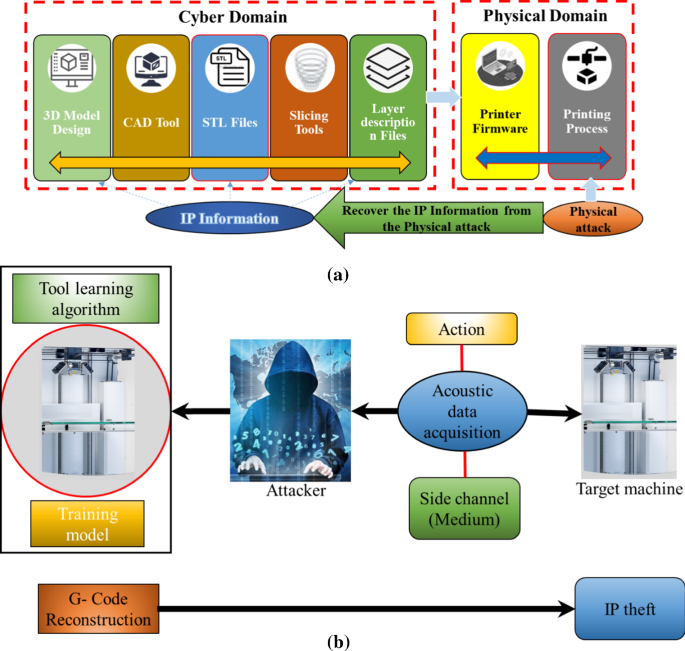

While parameter optimization can aid process predictability, it cannot totally eliminate failures (Kwon et al., 2018). Process monitoring approaches that can detect build failures and defects are required, since print problems account for a considerable portion of the cost of AM parts. Various ML solutions have attempted to handle this challenge, and they fall into two groups depending on the type of input data: optical and acoustic. The most frequently applied monitoring solutions are those that use information from digital, high-speed, or infrared cameras. The melt pool is a typical target in PBF operations, and most monitoring studies are directed at it. Kwon et al. (2018) used melt pool thermal data to train CNN-based software that distinguishes high-quality from low-quality builds with a failure rate of less than 1.1%, potentially saving time and money. Zhang et al. (2020b) found that combining melt pool, plume, and spatter data to classify component quality yields the best results. A form of NN known as a long short-term memory network has been found to give much better predictions (Zhang et al., 2021). Other AM techniques, such as binder jetting and material extrusion, have also benefited from optical monitoring. Gunther et al. (2020) employed an optical tool for analyzing defects in binder jet parts; the techniques used were not disclosed, and the model's accuracy was not discussed. Optical tracking has been used in material extrusion to detect defects in real time. Wu et al. (2016b) employed a classification method for detecting infill print faults in material extrusion, giving more confidence in the final product's quality. The study reported a 95% accuracy rate; however, it did not consider other essential quality metrics such as precision and recall. Li et al. (2021) determined dimensional deviation with zero mean error and a standard deviation of 0.02 mm using in situ optical monitoring of a material extrusion process. Sun et al. (2021) performed adaptive fault detection and root-cause analysis using moving-window kernel principal component analysis (KPCA) and information-geometric causal inference. They noted that this scheme performed well in reducing false alarm and missed detection rates and in locating the root cause of faults. Said et al. (2020) introduced a new fault detection method for process monitoring that uses kernel partial least squares (KPLS) in static and dynamic forms. They noted that the results obtained with reduced kernel partial least squares (KRPLS) demonstrated the efficiency of the developed technique in terms of false alarm rate, good detection rate, and computation time compared with conventional KPLS fault detection. Lee et al. (2020) proposed a method based on KPCA and found that it can effectively monitor tool wear. The proposed method can effectively integrate multi-sensor information and synthesize the data to estimate the state of the process.
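The point that accuracy alone can mislead when defects are rare can be illustrated with a short calculation. The sketch below computes accuracy, precision, recall, false alarm rate, and missed detection rate from binary labels; the layer counts and both detectors are invented for illustration and do not reproduce any of the cited results.

```python
def monitoring_metrics(y_true, y_pred):
    """Binary defect-detection metrics; label 1 = defective, 0 = good."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # defects caught
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # defects missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # good, passed

    def ratio(num, den):
        # Report 0.0 when a metric is undefined (empty denominator).
        return num / den if den else 0.0

    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "false_alarm_rate": ratio(fp, fp + tn),
        "missed_detection_rate": ratio(fn, fn + tp),
    }

# Hypothetical build: 100 inspected layers, 5 of which are truly defective.
truth = [1] * 5 + [0] * 95

# Detector A never raises an alarm.
never_flags = [0] * 100
# Detector B catches 4 of the 5 defects and raises 2 false alarms.
detector = [1, 1, 1, 1, 0] + [1, 1] + [0] * 93

naive = monitoring_metrics(truth, never_flags)
useful = monitoring_metrics(truth, detector)
print(naive)
print(useful)
```

In this made-up case the detector that never flags anything still reaches 95% accuracy while missing every defect, whereas the second detector has only slightly higher accuracy but a recall of 0.8. This is why precision, recall, and false alarm and missed detection rates are worth reporting alongside accuracy.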

Acoustic monitoring is a more recent and comparatively cheaper means of monitoring the build throughout the printing process. These methods rely on acoustic signals that are related to part porosity and melt states in PBF processes and to process failures in material extrusion. Compared with optical monitoring, acoustic monitoring systems use cheaper sensors. The ML algorithms used range from supervised CNNs to clustering solutions. Acoustic monitoring effectively flags problem builds and reduces the need for post-print examination and testing, with confidence levels of up to 89% for porosity classification and 94% for melt-pool-related defects. Extrusion processes have also been subjected to acoustic monitoring. Wu et al. (2015b) employed acoustic monitoring and an SVM classifier to determine, with 100% accuracy, whether the extruder was extruding material. The SVM could also identify the extruder state (normal, semi-blocked, or blocked) with 92% accuracy.

Powder spreading characterization

The consistency of powder distribution in the PBF process is critical to the quality of the final product. Improper powder distribution can cause a variety of problems, including warping and swelling, which can cause the entire build to fail. Examples of powder spreading problems include the re-coater striking curled-up or humped parts, the re-coater dragging contaminants, re-coater blade damage, and debris on the powder bed. Furthermore, eliminating the need for hand-crafted detectors for specific anomalies is highly desirable. To that end, a method for detecting and classifying powder spreading faults autonomously during the build was introduced. Scime and Beuth used modern computer vision techniques such as k-means clustering (Scime & Beuth, 2018a) and a multi-scale CNN (Scime & Beuth, 2018b) to train the algorithm. They also classified powder-bed image patches into seven categories using images recorded throughout the SLM process. This technology also allows in-process repair of flaws when a feedback control system is used.
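As a rough illustration of how k-means clustering can group powder-bed image patches without labels, the sketch below clusters two-dimensional feature vectors. It is a generic k-means with a deterministic farthest-point initialisation, not the pipeline of Scime and Beuth; the two features (mean brightness and edge density per patch) and all numerical values are hypothetical.

```python
import math

def kmeans(points, k, iters=50):
    """Plain k-means on tuples of floats; returns (centroids, labels)."""
    # Deterministic farthest-point initialisation: start from the first point,
    # then repeatedly add the point farthest from the centroids chosen so far.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        )

    def assign(cs):
        # Index of the nearest centroid for every point.
        return [min(range(k), key=lambda j: math.dist(p, cs[j])) for p in points]

    for _ in range(iters):
        labels = assign(centroids)
        updated = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                updated.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                updated.append(centroids[j])  # keep an empty cluster in place
        if updated == centroids:
            break  # assignments are stable
        centroids = updated
    return centroids, assign(centroids)

# Hypothetical patch features: (mean brightness, edge density), both in [0, 1].
patches = [
    (0.10, 0.10), (0.12, 0.08), (0.09, 0.11),   # e.g. uniform powder
    (0.80, 0.20), (0.82, 0.22), (0.78, 0.18),   # e.g. bright streaks
    (0.50, 0.90), (0.52, 0.88), (0.48, 0.92),   # e.g. debris with many edges
]
centroids, labels = kmeans(patches, k=3)
print(labels)
```

The cluster indices carry no meaning by themselves; in practice an operator or a small labelled set would be needed to map each cluster to an anomaly type.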

Defect detection, quality prediction, and closed-loop control

In situ monitoring devices have advanced to the point that real-time data can be collected for defect detection and closed-loop control in AM. ML models may use real-time data such as spectra, images, acoustic emission (AE), and computed tomography (CT) in a variety of ways, as shown below.

- Label the data as defective (potentially with defect categories) or not, using experimental tests or human expertise. Then train supervised learning models to detect defects and predict performance in real time, which is a common application of ML classification techniques.

- Perform cluster analysis with unsupervised learning approaches so that abnormal data are grouped together and faults are detected without labelling.

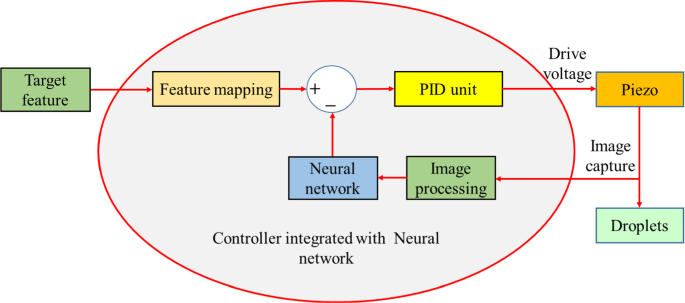

- Build ML regression models from data on process conditions that can be adjusted in real time, and use them to modify these processing parameters during the build. The third approach is illustrated by voltage level control in the MJ process. The process control structure is made up of three basic components, as indicated in Fig. 12. First, a charge-coupled device (CCD) camera captures dynamic images of the droplet. Second, four droplet properties (satellite, ligament, volume, and speed) are extracted from the images and combined with the current voltage to train a neural network (NN) model. Finally, the trained ML model is used to determine the appropriate voltage level and send it to the voltage adjustment system, which regulates the droplet jetting behavior.
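The closed-loop idea in the third approach can be sketched in a few lines. The example below replaces the neural network with a plain least-squares line, purely to keep the illustration short, and uses droplet speed as the only measured property. The calibration data, the voltage limits, and the `jetting_process` function standing in for the real printer are all assumptions made for this sketch.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return slope, my - slope * mx

def next_voltage(voltage, measured, target, slope, v_min=20.0, v_max=40.0):
    """Model-based correction: move the voltage by error / slope, then clamp."""
    proposed = voltage + (target - measured) / slope
    return min(max(proposed, v_min), v_max)

def jetting_process(voltage):
    """Hypothetical 'true' printer response (droplet speed in m/s).

    It deliberately differs from the calibration data, mimicking drift.
    """
    return 0.09 * voltage - 0.9

# Hypothetical calibration data: drive voltage (V) vs. droplet speed (m/s).
calib_voltage = [20.0, 25.0, 30.0, 35.0]
calib_speed = [1.0, 1.5, 2.0, 2.5]
slope, intercept = fit_line(calib_voltage, calib_speed)

target_speed = 2.0
voltage = 30.0
for step in range(6):
    speed = jetting_process(voltage)   # stands in for camera measurement
    voltage = next_voltage(voltage, speed, target_speed, slope)
    print(f"step {step}: measured {speed:.4f} m/s, next voltage {voltage:.3f} V")

final_speed = jetting_process(voltage)
```

Because each correction uses a fresh measurement, the loop still converges on the target even though the fitted model does not match the drifting process exactly; that tolerance to model error is the main reason for closing the loop rather than setting the voltage once from the model.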

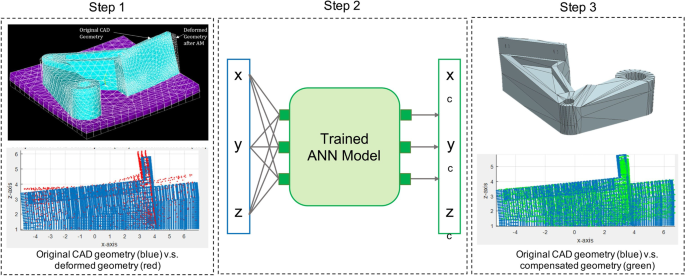

Geometric deviation control

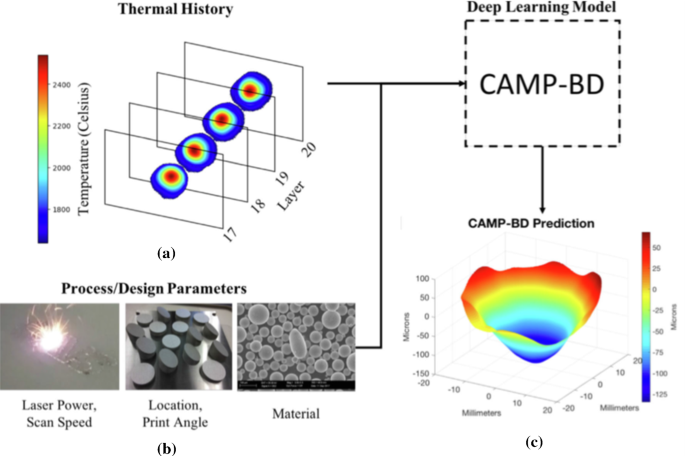

AM parts commonly have low geometric precision and surface integrity (Grasso, 2017). These geometric flaws hinder the use of AM in a variety of fields, including aerospace and pharma. In such cases, ML models can recognize geometric faults, quantify the deviation, and recommend corrections. As shown in Fig. 13, Francis et al. (Francis & Letters, 2019) built a geometric error compensation framework for the L-PBF process using a convolutional neural network (CNN) ML model. The trained model takes thermal data and a few process variables as inputs and predicts distortion as the output, which is then fed back into the CAD model for error compensation. The geometric accuracy of parts manufactured from the adjusted CAD model is greatly improved by this method.
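The compensation step itself is simple once a distortion prediction is available: each nominal point is shifted by the negative of its predicted distortion before printing. The sketch below shows this on a square outline. The function `predict_distortion` is a hypothetical stand-in for a trained model (here, uniform 2% shrinkage towards the part centre), and the same function is reused to simulate the print, which assumes a perfect predictor.

```python
import math

SHRINK = 0.02  # assumed 2% shrinkage towards the centre (illustrative value)

def predict_distortion(point, centre):
    """Hypothetical stand-in for a trained distortion model.

    Returns the displacement the process is expected to add at `point`.
    """
    return tuple(-SHRINK * (p - c) for p, c in zip(point, centre))

def compensate(nominal, centre):
    """Pre-distort the design: subtract the predicted distortion per point."""
    return [
        tuple(p - d for p, d in zip(pt, predict_distortion(pt, centre)))
        for pt in nominal
    ]

def simulate_print(design, centre):
    """Simulated build: the process adds the distortion to the design points."""
    return [
        tuple(p + d for p, d in zip(pt, predict_distortion(pt, centre)))
        for pt in design
    ]

def max_deviation(printed, nominal):
    """Largest point-to-point distance between printed and nominal geometry."""
    return max(math.dist(a, b) for a, b in zip(printed, nominal))

# Nominal 10 mm square outline (corner points) and its centre.
nominal = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
centre = (5.0, 5.0)

dev_before = max_deviation(simulate_print(nominal, centre), nominal)
dev_after = max_deviation(simulate_print(compensate(nominal, centre), centre), nominal)
print(f"max deviation without compensation: {dev_before:.4f} mm")
print(f"max deviation with compensation:    {dev_after:.4f} mm")
```

A single compensation pass does not remove the error entirely, because the distortion is evaluated at the nominal rather than the compensated positions; the residual here is second order in the shrinkage factor. With a real, imperfect predictor the residual would also include the model's own prediction error.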

Cost estimation

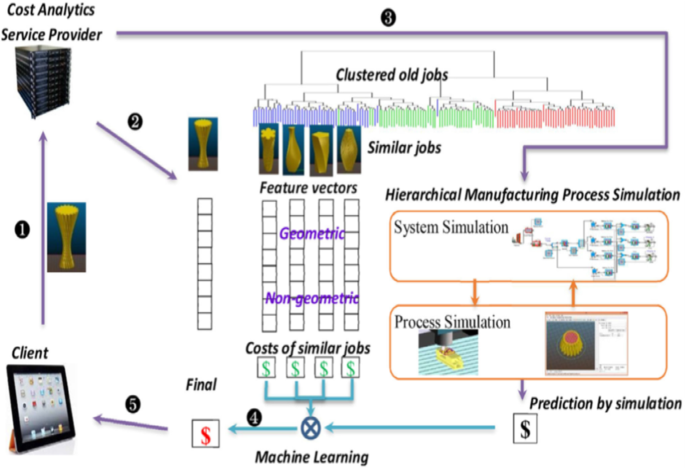

Manufacturers, clients, and other supply chain stakeholders need to know how much a print costs and how long it takes. Although the dimensions of the proposed shape can be used to estimate both, a more precise and effective cost estimation technique is still necessary. Chan et al. (2018) recently published an application of ML to cost estimation. The cost estimation framework they proposed is shown in Fig. 14:

- A customer presents a production order that includes a three-dimensional model;

- The input vector is formed from the three-dimensional model and fed into trained ML algorithms for cost estimation using clustering analysis based on similar workloads;

- If the customer requests it, or if the data required for training the ML algorithm are insufficient, the 3D model is passed to modeling techniques to estimate costs, and the results are used as a training source for the ML algorithms;

- After integrating the ML forecasts, the gross expected cost is calculated;

- The customer gets the final estimate.
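The workflow listed above can be sketched as a small decision routine. This is only an outline under stated assumptions: the clustering-based ML step is replaced by a simple average over past jobs that lie within a similarity radius, the input vector (part volume and build height) is invented, and `simulate_cost` is a hypothetical placeholder for the simulation-based costing path.

```python
import math

def features(job):
    """Hypothetical input vector derived from the submitted 3D model."""
    return (job["volume_cm3"], job["height_mm"])

def simulate_cost(job):
    """Hypothetical placeholder for simulation-based cost modelling."""
    return 5.0 + 0.8 * job["volume_cm3"] + 0.1 * job["height_mm"]

def estimate_cost(job, history, radius=10.0, min_similar=2, force_simulation=False):
    """Return (cost, source) for a job.

    `history` is a list of (feature_vector, cost) pairs from past jobs.
    If too few similar jobs exist, or the customer asks for it, fall back
    to simulation and store the result as new training data.
    """
    x = features(job)
    similar = [cost for feats, cost in history if math.dist(feats, x) <= radius]
    if force_simulation or len(similar) < min_similar:
        cost = simulate_cost(job)
        history.append((x, cost))        # grows the training set
        return cost, "simulation"
    return sum(similar) / len(similar), "ml"

# Hypothetical past jobs: (volume in cm^3, height in mm) -> cost.
history = [((50.0, 30.0), 48.0), ((55.0, 32.0), 52.0)]

familiar = {"volume_cm3": 52.0, "height_mm": 31.0}
unusual = {"volume_cm3": 200.0, "height_mm": 100.0}

cost_a, source_a = estimate_cost(familiar, history)
cost_b, source_b = estimate_cost(unusual, history)
print(cost_a, source_a)
print(cost_b, source_b)
```

Feeding simulated costs back into the job history mirrors the third step of the framework: the data-driven path gradually covers part geometries that initially required simulation.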

Machine learning for additive manufacturing production

Additive manufacturing planning